CUBRID HA¶

High Availability (HA) is the ability to provide uninterrupted service in the event of hardware, software, or network failure. This ability is critical in network computing, where services must be available 24/7. An HA system consists of two or more server systems and continues to provide service even when one of them fails.

CUBRID HA is an implementation of High Availability. It keeps databases synchronized across multiple servers while they provide service. When an unexpected failure occurs in the system that is serving requests, CUBRID HA minimizes service downtime by letting another system take over the service automatically.

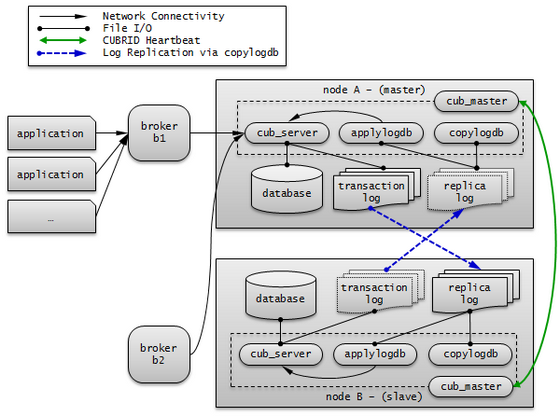

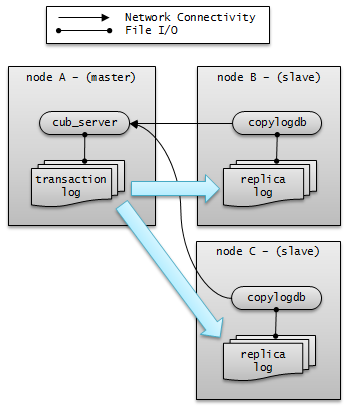

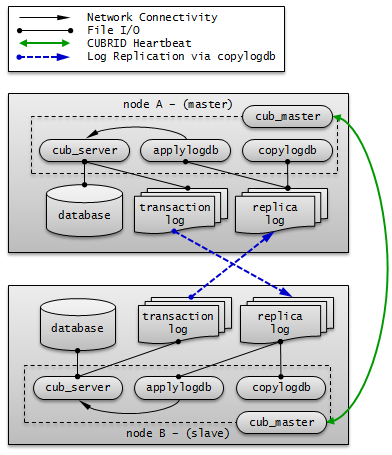

CUBRID HA has a shared-nothing structure. To synchronize data from an active server to a standby server, CUBRID HA performs the following two steps.

- Transaction log multiplexing: Replicates the transaction logs created by an active server to another node in real time.

- Transaction log reflection: Analyzes replicated transaction logs in real time and reflects the data to a standby server.

CUBRID HA performs these steps continuously so that the active server and the standby server always stay synchronized. If the active server stops working properly because the master node that was providing service fails, the standby server of the slave node takes over the service. CUBRID HA monitors the status of the system and of CUBRID in real time, and it uses heartbeat messages to trigger an automatic failover when a failure occurs.

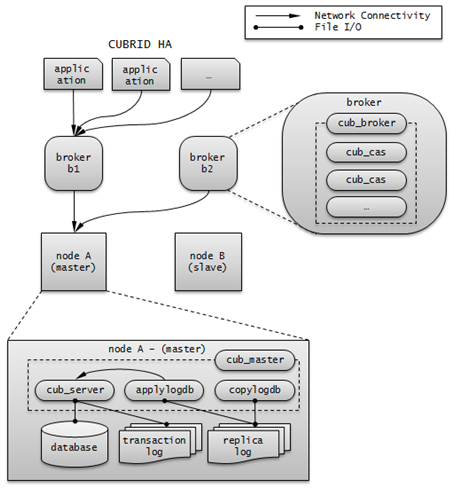

CUBRID HA Concept¶

Nodes and Groups¶

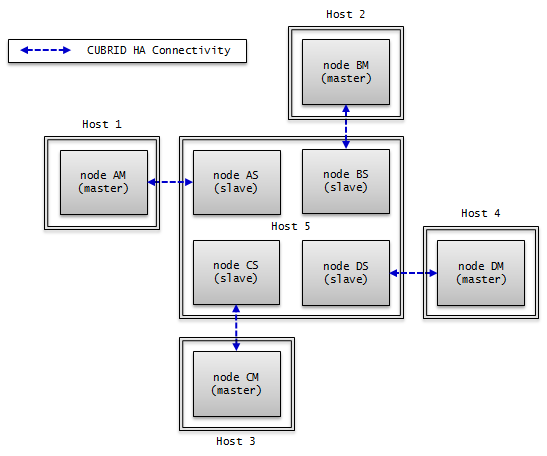

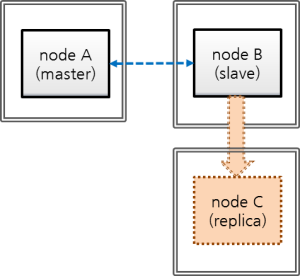

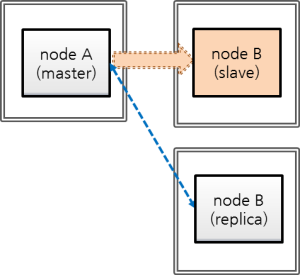

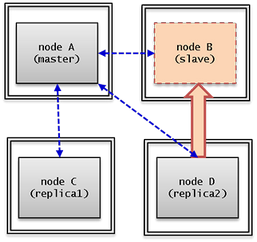

A node is a logical unit that makes up CUBRID HA. It can become one of the following nodes according to its status: master node, slave node, or replica node.

- Master node : The node that is the source of replication. It provides all services, including read and write, using an active server.

- Slave node : A node that has the same information as a master node. Changes made in the master node are automatically reflected to the slave node. It provides the read service using a standby server, and a failover will occur when the master node fails.

- Replica node : A node that has the same information as a master node. Changes made in the master node are automatically reflected to the replica node. It provides the read service using a standby server, and no failover will occur when the master node fails.

The CUBRID HA group consists of the nodes described above. You can configure the members of this group by using ha_node_list and ha_replica_list in the cubrid_ha.conf file. Nodes in a group have the same information. They exchange status-checking messages periodically, and a failover occurs when the master node fails.

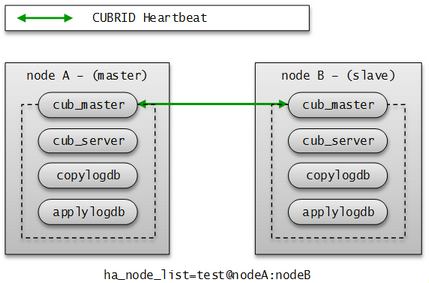

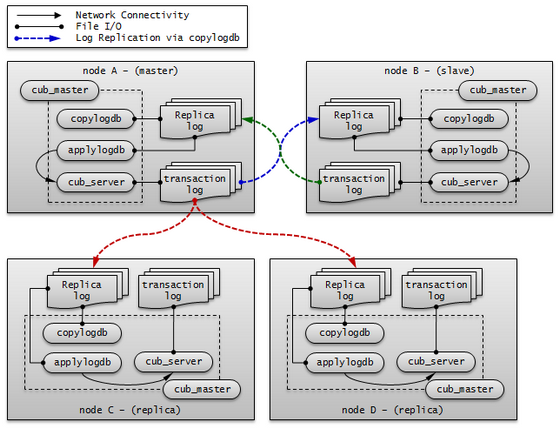

A node includes the master process (cub_master), the database server process (cub_server), the replication log copy process (copylogdb), the replication log reflection process (applylogdb), etc.

Processes¶

A CUBRID HA node consists of one master process (cub_master), one or more database server processes (cub_server), one or more replication log copy processes (copylogdb), and one or more replication log reflection processes (applylogdb). When a database is configured, its database server process, replication log copy process, and replication log reflection process are started. Because copying and reflecting a replication log are handled by different processes, a delay in reflecting the log does not affect the transaction that is being executed.

- Master process (cub_master) : Exchanges heartbeat messages to control the internal management processes of CUBRID HA.

- Database server process (cub_server) : Provides services such as read or write to the user. For details, see Servers.

- Replication log copy process (copylogdb) : Copies all transaction logs in a group. When the replication log copy process requests a transaction log from the database server process of the target node, the database server process returns the corresponding log. The location of copied transaction logs can be configured with ha_copy_log_base in cubrid_ha.conf. Use the cubrid applyinfo utility to verify the information of copied replication logs. The replication log copy process has two modes, SYNC and ASYNC, which you can configure with ha_copy_sync_mode in cubrid_ha.conf. For details on these modes, see Log Multiplexing.

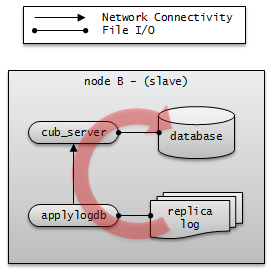

- Replication log reflection process (applylogdb) : Reflects the log that has been copied by the replication log copy process to a node. The information of reflected replications is stored in the internal catalog (db_ha_apply_info). You can use the cubrid applyinfo utility to verify this information.

Servers¶

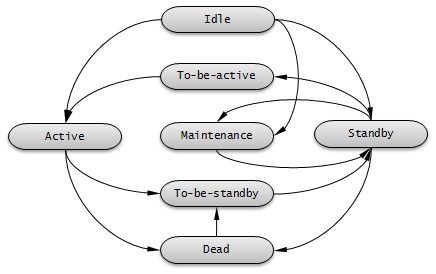

Here, the word "server" is a logical representation of database server processes. Depending on its status, a server can be either an active server or a standby server.

- Active server : A server that belongs to a master node; the status is active. An active server provides all services, including read, write, etc. to the user.

- Standby server : A server that belongs to a non-master node; the status is standby. A standby server provides only the read service to the user.

The server status changes based on the status of the node. You can use the cubrid changemode utility to verify server status. The maintenance mode exists for operational convenience and you can change it by using the cubrid changemode utility.

- active : The status of servers that run on a master node is usually active. In this status, all services including read, write, etc. are provided.

- standby : The status of servers that run on a slave node or a replica node is standby. In this status, only the read service is provided.

- maintenance : A status that can be set manually for operational convenience. In this status, only csql can access the server and no service is provided to the user.

- to-be-active : The status of a standby server that is about to become active, for example because of a failover. In this status, the server prepares to become active by reflecting the transaction logs from the existing master node to itself. A node in this status accepts only SELECT queries.

- Other : This status is internally used.

When the node status changes, the following messages are written to the cub_master process log and the cub_server process log. They are written only when the value of error_log_level in cubrid.conf is error or lower.

The following log information of the cub_master process is saved in the $CUBRID/log/<hostname>_master.err file.

HA generic: Send changemode request to the server. (state:1[active], args:[cub_server demodb ], pid:25728).

HA generic: Receive changemode response from the server. (state:1[active], args:[cub_server demodb ], pid:25728).

The following log information of cub_server is saved in the $CUBRID/log/server/<db_name>_<date>_<time>.err file.

Server HA mode is changed from 'to-be-active' to 'active'.

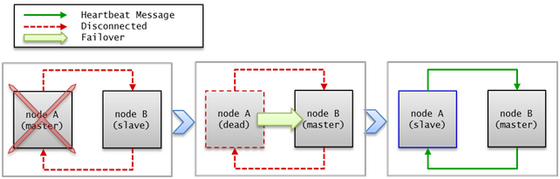

heartbeat Message¶

The heartbeat message is a core element of HA: master, slave, and replica nodes exchange it to monitor the status of the other nodes. A master process periodically exchanges heartbeat messages with all other master processes in the group. Heartbeat messages are exchanged through the UDP port configured in the ha_port_id parameter of cubrid_ha.conf. The exchange interval is determined by an internally configured value.

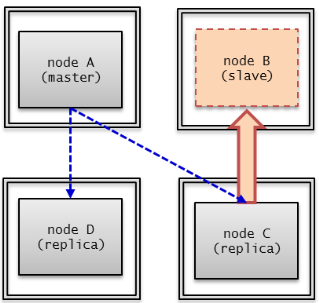

When the master node fails, a failover occurs to a slave node.

failover and failback¶

A failover means that the highest-priority slave node automatically becomes the new master node when the original master node can no longer provide service because of a failure. A master process calculates scores for all nodes in the CUBRID HA group based on the collected information, promotes a slave node to master node when necessary, and then notifies the management process of the change.

A failback means that the previously failed master node automatically becomes the master node again after it has been restored. CUBRID HA does not currently support this functionality.

If a heartbeat message fails to be delivered, a failover occurs. For this reason, servers with an unstable connection may experience a failover even though no actual failure has occurred. To prevent a failover in this situation, configure ha_ping_hosts. When a heartbeat message fails to be delivered, a ping message is sent to the nodes specified in ha_ping_hosts to verify whether the network is stable. For details on configuring ha_ping_hosts, see cubrid_ha.conf.
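The ping check described above can be pictured as a small decision rule. The following Python sketch is only an illustration under stated assumptions: the function name `should_fail_over`, the injected `ping` callable, and the exact rule (treat a missed heartbeat as a real failure only when at least one ping host answers) are hypothetical and are not taken from the CUBRID implementation.

```python
from typing import Callable, Iterable


def should_fail_over(heartbeat_ok: bool,
                     ping_hosts: Iterable[str],
                     ping: Callable[[str], bool]) -> bool:
    """Decide whether a missed heartbeat should lead to a failover.

    Hypothetical rule for illustration only, not CUBRID's actual logic.
    `ping` is any callable that returns True when the host answers.
    """
    if heartbeat_ok:
        # The peer answered, so there is nothing to do.
        return False
    hosts = list(ping_hosts)
    if not hosts:
        # No ping hosts configured: a missed heartbeat is taken at face value.
        return True
    # If at least one ping host answers, the local network looks healthy,
    # so the missed heartbeat is attributed to the peer rather than to us.
    return any(ping(host) for host in hosts)


# Usage with a fake ping function (no real network traffic is generated).
reachable = {"gateway"}
print(should_fail_over(False, ["gateway"], lambda h: h in reachable))
print(should_fail_over(False, ["router"], lambda h: h in reachable))
```

Injecting the `ping` callable keeps the sketch testable without touching the network; a real check would have to deal with timeouts and retries.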

Broker Mode¶

A broker can access a server with one of the following modes: Read Write, Read Only or Standby Only. This configuration value is determined by a user.

A broker finds a suitable DB server by trying connections in the order in which the DB servers are defined; that is, if it fails to establish a connection, it tries the next DB server in the list until it reaches the last one. If no connection is made even after trying all servers, the broker fails to connect to a DB server.

For details on how to configure broker mode, see cubrid_broker.conf.

DB connection is affected by PREFERRED_HOSTS, CONNECT_ORDER and MAX_NUM_DELAYED_HOSTS_LOOKUP parameters in cubrid_broker.conf. See Connecting a Broker to DB for further information.

The following descriptions apply when the above parameters are not specified.

Read Write

"ACCESS_MODE=RW"

A broker that provides read and write services. This broker is usually connected to an active server. If there is no active server, it is temporarily connected to a standby server.

When the broker temporarily establishes a connection to a standby server, it will disconnect itself from the standby server at the end of every transaction so that it can attempt to find an active server at the beginning of the next transaction. When it is connected to the standby server, only read service is available. Any write requests will result in a server error.

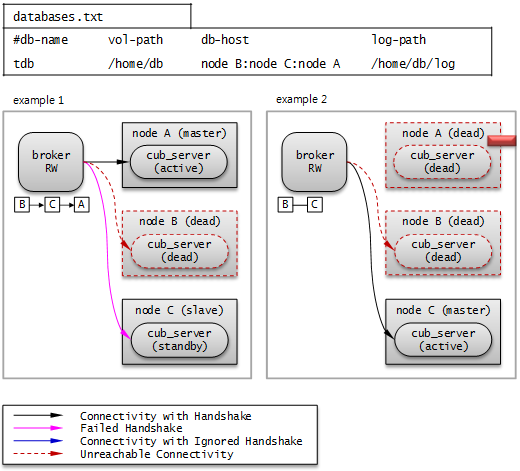

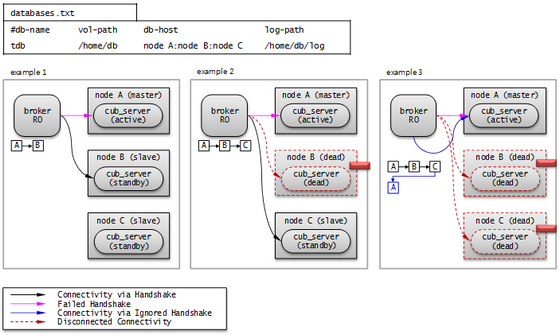

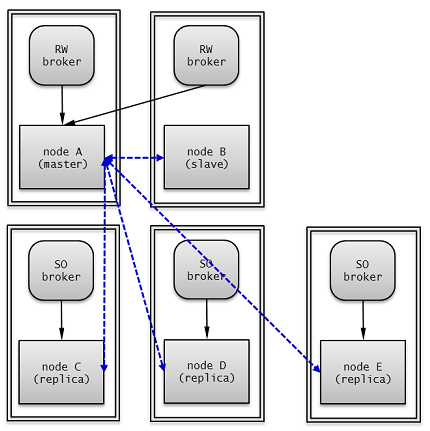

The following picture shows how a broker connects to the host through the db-host configuration.

The broker tries to connect in the order B, C, A because db-host in databases.txt is "node B:node C:node A". Here, the names "node B:node C:node A" specified in db-host are the real host names defined in the /etc/hosts file.

- Example 1. node B has crashed, node C is in standby status, and node A is in active status. Therefore, the broker finally connects to node A.

- Example 2. node B has crashed, and node C is in active status. Therefore, the broker finally connects to node C.

Read Only

"ACCESS_MODE=RO"

A broker that provides the read service. This broker connects to a standby server if possible. If no standby server is available, it connects to an active server temporarily.

Once it establishes a connection with an active server, it maintains that connection for the period specified by RECONNECT_TIME. After RECONNECT_TIME has elapsed, the broker disconnects the old connection and tries to reconnect. Alternatively, you can reconnect to the standby server by running cubrid broker reset. If a write request is delivered to the Read Only broker, an error occurs in the broker; therefore, only the read service is available even when it is connected to an active server.

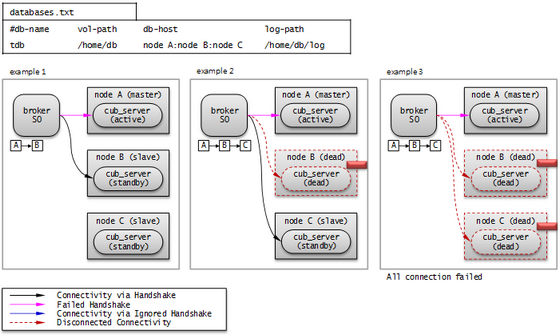

The following picture shows how a broker connects to the host through the db-host configuration.

The broker tries to connect in the order A, B, C because db-host in databases.txt is "node A:node B:node C". Here, the names "node A:node B:node C" specified in db-host are the real host names defined in the /etc/hosts file.

- Example 1. node A is in active status and node B is in standby status. Therefore, the broker finally connects to node B.

- Example 2. node A is in active status, node B has crashed, and node C is in standby status. Therefore, the broker finally connects to node C.

- Example 3. node A is in active status, and node B and node C have crashed. Therefore, the broker finally connects to node A.

Standby Only

"ACCESS_MODE=SO"

A broker that provides the read service. This broker can only be connected to a standby server. If no standby server exists, no service will be provided.

The following picture shows how a broker connects to the host through the db-host configuration.

The broker tries to connect in the order A, B, C because db-host in databases.txt is "node A:node B:node C". Here, the names "node A:node B:node C" specified in db-host are the real host names defined in the /etc/hosts file.

- Example 1. node A is in active status and node B is in standby status. Therefore, the broker finally connects to node B.

- Example 2. node A is in active status, node B has crashed, and node C is in standby status. Therefore, the broker finally connects to node C.

- Example 3. node A is in active status, and node B and node C have crashed. Therefore, the broker does not connect to any node. This is the difference from the Read Only broker.
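The connection examples for the three broker modes can be reproduced with a short simulation. This is a minimal sketch, not the broker's actual code: the function `pick_host`, the status strings, and the two-pass search (preferred server state first, then the temporary fallback) are assumptions chosen to match the examples above, and the sketch ignores PREFERRED_HOSTS, CONNECT_ORDER, and MAX_NUM_DELAYED_HOSTS_LOOKUP.

```python
from typing import Dict, Optional, Sequence

# Preferred and fallback server states per broker mode, following the
# descriptions above. None means the mode has no fallback.
_MODE_RULES = {
    "RW": ("active", "standby"),
    "RO": ("standby", "active"),
    "SO": ("standby", None),
}


def pick_host(access_mode: str,
              db_hosts: Sequence[str],
              status: Dict[str, str]) -> Optional[str]:
    """Return the host a broker would end up on, or None if there is none.

    Illustrative model only. `db_hosts` is the db-host order from
    databases.txt; `status` maps a host to "active", "standby", or "down".
    Hosts missing from `status` are treated as down.
    """
    if access_mode not in _MODE_RULES:
        raise ValueError(f"unknown ACCESS_MODE: {access_mode!r}")
    preferred, fallback = _MODE_RULES[access_mode]
    for wanted in (preferred, fallback):
        if wanted is None:
            continue
        # Walk the hosts in db-host order and take the first match.
        for host in db_hosts:
            if status.get(host, "down") == wanted:
                return host
    return None


# Read Write, Example 1: B crashed, C standby, A active.
print(pick_host("RW", ["B", "C", "A"],
                {"B": "down", "C": "standby", "A": "active"}))
# Standby Only, Example 3: only the active node A is left.
print(pick_host("SO", ["A", "B", "C"],
                {"A": "active", "B": "down", "C": "down"}))
```

The first call selects node A and the second returns `None`, which mirrors the difference between the Read Only and Standby Only modes: only the former falls back to an active server.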

CUBRID HA Features¶

Duplexing Servers¶

Duplexing servers means building a system with duplicate hardware equipment to provide CUBRID HA. This method prevents service interruption when a hardware failure occurs in a server.

Server failover

A broker defines server connection order and connects to a server according to the defined order. If the connected server fails, the broker connects to the server with the next highest priority. This requires no processing in the application side. The actions taken when the broker connects to another server may differ according to the current mode of the broker. For details on the server connection order and configuring broker mode, see cubrid_broker.conf.

Server failback

CUBRID HA does not automatically support server failback. Therefore, to manually apply failback, restore the master node that has been abnormally terminated and run it as a slave node, terminate the node that has become the master from the slave due to failover, and finally, change the role of each node again.

For example, when nodeA is the master and nodeB is the slave, nodeB becomes the master and nodeA becomes the slave after a failover. After terminating nodeB (cubrid heartbeat stop), check (cubrid heartbeat status) whether the status of nodeA has become active. Then start nodeB (cubrid heartbeat start), and it will become the slave.

Duplexing Brokers¶

As a 3-tier DBMS, CUBRID has middleware called the broker, which relays requests between applications and database servers. To provide HA, the broker also requires duplicate hardware equipment. This method prevents service interruption when a hardware failure occurs in a broker.

The configuration of broker redundancy is not determined by the configuration of server redundancy; it can be user-defined. In addition, brokers can be placed on separate pieces of equipment.

To use the failover and failback functionalities of a broker, the altHosts attribute must be added to the connection URL of the JDBC, CCI, or PHP. For a description of this, see JDBC Configuration, CCI Configuration and PHP Configuration.

To set a broker, configure the cubrid_broker.conf file. To set the order of failovers of a database server, configure the databases.txt file. For more information, see Configuring and Starting Broker, and Verifying the Broker Status.

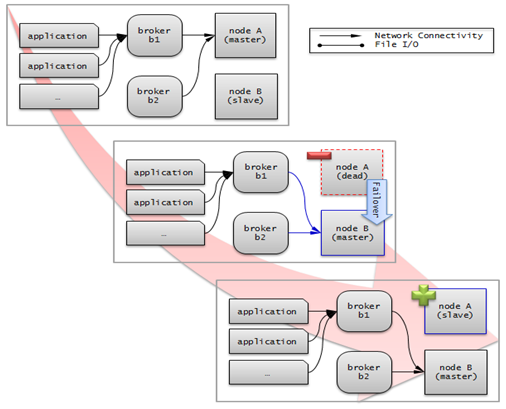

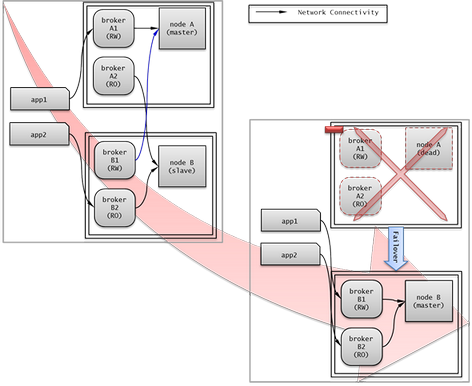

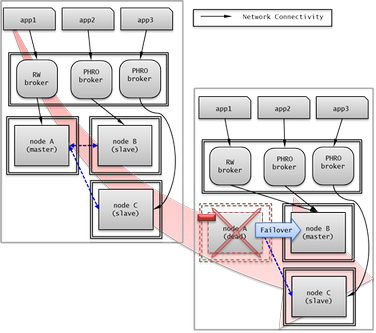

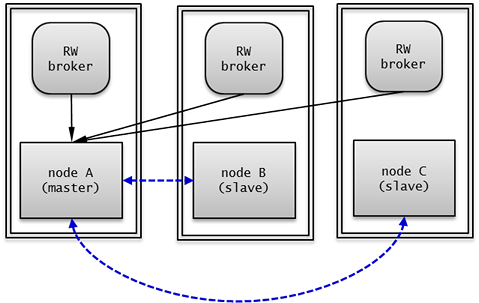

The following is an example in which two Read Write (RW) brokers are configured. When the first connection broker of the application URL is set to broker B1 and the second connection broker to broker B2, the application connects to broker B2 when it cannot connect to broker B1. When broker B1 becomes available again, the application reconnects to broker B1.

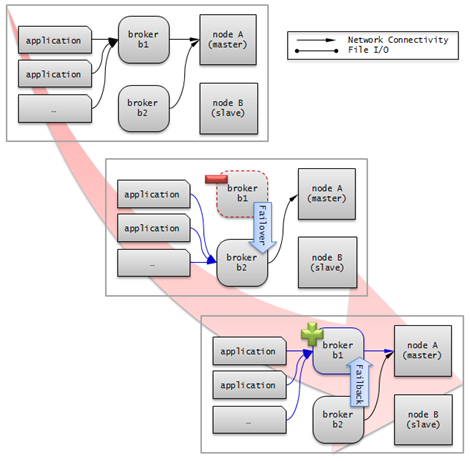

The following is an example in which the Read Write (RW) broker and the Read Only (RO) broker are configured in each piece of equipment of the master node and the slave node. First, the app1 and the app2 URL connect to broker A1 (RW) and broker B2 (RO), respectively. The second connection (altHosts) is made to broker A2 (RO) and broker B1 (RW). When equipment that includes nodeA fails, app1 and the app2 connect to the broker that includes nodeB.

The following is an example of a configuration in which broker equipment includes one Read Write broker (master node) and two Preferred Host Read Only brokers (slave nodes). The Preferred Host Read Only brokers are connected to nodeB and nodeC to distribute the reading load.

Broker failover

Broker failover is not performed automatically through system parameter settings. It is available in JDBC, CCI, and PHP applications only when broker hosts are configured in the altHosts of the connection URL. Applications connect to the broker with the highest priority. When the connected broker fails, the application connects to the broker with the next highest priority. Configuring the altHosts of the connection URL is the only action required; the rest is handled by the JDBC, CCI, and PHP drivers.

Broker failback

If the failed broker is recovered after a failover, the connection to the existing broker is terminated and a new connection is established with the recovered broker which has the highest priority. This requires no processing in the application side as it is processed within the JDBC, CCI, and PHP drivers. Execution time of failback depends on the value configured in JDBC connection URL. For details, see JDBC Configuration.

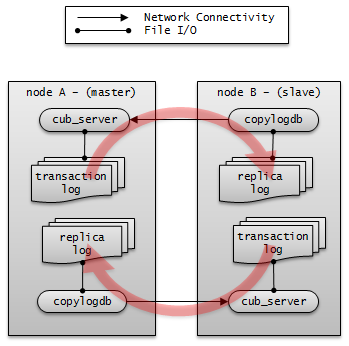

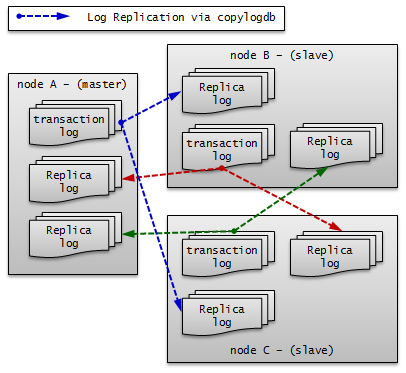

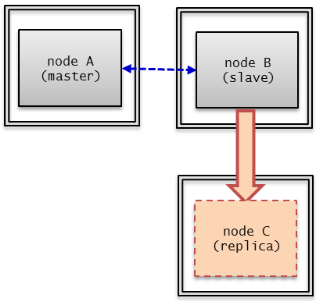

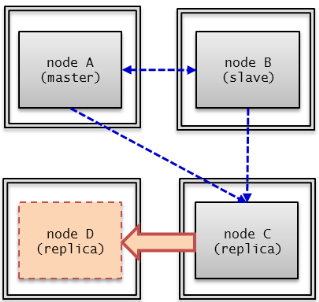

Log Multiplexing¶

CUBRID HA keeps every node in the CUBRID HA group identical by copying and reflecting transaction logs to all nodes in the group. Because the log copy structure of CUBRID HA is a mutual copy between the master and the slave nodes, it has the disadvantage of increasing the size of the log volume. However, compared to a chain-type copy structure, it has the advantage of flexibility in configuration and failure handling.

The transaction log copy modes include SYNC and ASYNC. This value can be configured by the user in cubrid_ha.conf file.

SYNC Mode

When transactions are committed, the created transaction logs are copied to the slave node and stored as a file. The transaction commit is complete after receiving a notice on its success. Although the time to execute commit in this mode may take longer than that in ASYNC mode, this is the safest method because the copied transaction logs are always guaranteed to be reflected to the standby server even if a failover occurs.

ASYNC Mode

When transactions are committed, the commit completes without verifying that the transaction logs have been transferred to a slave node. Therefore, from the master node's point of view, there is no guarantee that committed transactions have been reflected to a slave node.

Although ASYNC mode provides a better performance as it has almost no delay when executing commit, there may be data inconsistency in its nodes.
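The difference between the two copy modes can be illustrated with a toy model. The sketch below is not how CUBRID moves logs (in CUBRID HA the copy process requests logs from the server); the classes `Master` and `Slave` and the method `ship_pending` are hypothetical names that only show when the slave's copy is known to exist relative to the commit.

```python
from typing import List


class Slave:
    """Toy slave that stores copied log records in memory."""

    def __init__(self) -> None:
        self.copied_log: List[str] = []

    def receive(self, record: str) -> bool:
        self.copied_log.append(record)
        return True  # acknowledgement that the record was stored


class Master:
    """Toy master illustrating SYNC and ASYNC commit behaviour."""

    def __init__(self, slave: Slave, mode: str) -> None:
        if mode not in ("sync", "async"):
            raise ValueError(f"unknown mode: {mode!r}")
        self.slave = slave
        self.mode = mode
        self.pending: List[str] = []

    def commit(self, record: str) -> None:
        if self.mode == "sync":
            # SYNC: the commit finishes only after the slave acknowledges.
            if not self.slave.receive(record):
                raise RuntimeError("log copy was not acknowledged")
        else:
            # ASYNC: the commit finishes immediately; the copy happens later.
            self.pending.append(record)

    def ship_pending(self) -> None:
        """Deliver records that ASYNC commits left behind."""
        while self.pending:
            self.slave.receive(self.pending.pop(0))


sync_slave, async_slave = Slave(), Slave()
Master(sync_slave, "sync").commit("t1")
async_master = Master(async_slave, "async")
async_master.commit("t1")
print(sync_slave.copied_log, async_slave.copied_log)
```

In this model, a master failure between `commit` and `ship_pending` in ASYNC mode leaves the slave without the record, which is the inconsistency risk mentioned above.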

Note

SEMISYNC mode, which existed in previous versions, now operates in the same way as SYNC mode.

Quick Start¶

This chapter briefly explains how to build a 1:1 master node and slave node configuration, starting from database creation. For details on other ways to build replication, see Building Replication.

Preparation¶

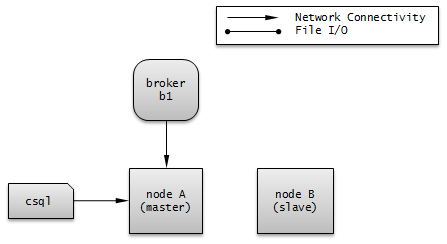

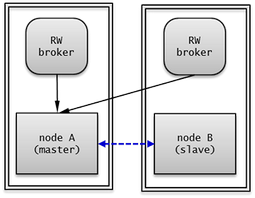

Structure Diagram

The diagram below aims to help users who are new to CUBRID HA, by explaining a simple procedure of the CUBRID HA configuration.

Specifications

Linux and CUBRID version 2008 R2.2 or later must be installed on the equipment to be used as the master and the slave nodes. CUBRID HA does not support the Windows operating system.

Specifications of Configuring the CUBRID HA Equipment

| | CUBRID Version | OS |
|---|---|---|
| For master nodes | CUBRID 2008 R2.2 or later | Linux |
| For slave nodes | CUBRID 2008 R2.2 or later | Linux |

Note

This document describes the HA configuration in CUBRID 9.2 or later versions. Note that previous versions have different settings. For example, cubrid_ha.conf is only available in CUBRID 2008 R4.0 or later, and ha_make_slavedb.sh was introduced in CUBRID 2008 R4.1 Patch 2.

Creating Databases and Configuring Servers¶

Creating Databases

Create databases to be included in CUBRID HA at each node of the CUBRID HA in the same manner. Modify the options for database creation as needed.

[nodeA]$ cd $CUBRID_DATABASES

[nodeA]$ mkdir testdb

[nodeA]$ cd testdb

[nodeA]$ mkdir log

[nodeA]$ cubrid createdb -L ./log testdb en_US

Creating database with 512.0M size. The total amount of disk space needed is 1.5G.

CUBRID 9.3

[nodeA]$

cubrid.conf

Ensure that ha_mode in $CUBRID/conf/cubrid.conf has the same value on every CUBRID HA node. Take particular care when configuring the log_max_archives and force_remove_log_archives parameters (logging parameters) and the ha_mode parameter (HA parameter).

# Service parameters

[service]

service=server,broker,manager

# Common section

[common]

service=server,broker,manager

# Server parameters

server=testdb

data_buffer_size=512M

log_buffer_size=4M

sort_buffer_size=2M

max_clients=100

cubrid_port_id=1523

db_volume_size=512M

log_volume_size=512M

# Adds when configuring HA (Logging parameters)

log_max_archives=100

force_remove_log_archives=no

# Adds when configuring HA (HA mode)

ha_mode=on

cubrid_ha.conf

Ensure that ha_port_id, ha_node_list, and ha_db_list in $CUBRID/conf/cubrid_ha.conf have the same values on every CUBRID HA node. In the example below, we assume that the host name of the master node is nodeA and that of the slave node is nodeB.

[common]

ha_port_id=59901

ha_node_list=cubrid@nodeA:nodeB

ha_db_list=testdb

ha_copy_sync_mode=sync:sync

ha_apply_max_mem_size=500

databases.txt

Configure the host names (nodeA:nodeB) of the master and slave nodes in db-host of $CUBRID_DATABASES/databases.txt (if $CUBRID_DATABASES is not configured, use $CUBRID/databases/databases.txt).

#db-name vol-path db-host log-path lob-base-path

testdb /home/cubrid/DB/testdb nodeA:nodeB /home/cubrid/DB/testdb/log file:/home/cubrid/DB/testdb/lob

Starting and Verifying CUBRID HA¶

Starting CUBRID HA

Execute the cubrid heartbeat start at each node in the CUBRID HA group. Note that the node executing cubrid heartbeat start first will become a master node. In the example below, we assume that the host name of a master node is nodeA and that of a slave node is nodeB.

Master node

[nodeA]$ cubrid heartbeat start

Slave node

[nodeB]$ cubrid heartbeat start

Verifying CUBRID HA Status

Execute cubrid heartbeat status at each node in the CUBRID HA group to verify its configuration status.

[nodeA]$ cubrid heartbeat status

@ cubrid heartbeat list

HA-Node Info (current nodeA-node-name, state master)

Node nodeB-node-name (priority 2, state slave)

Node nodeA-node-name (priority 1, state master)

HA-Process Info (master 9289, state master)

Applylogdb testdb@localhost:/home1/cubrid1/DB/testdb_nodeB.cub (pid 9423, state registered)

Copylogdb testdb@nodeB-node-name:/home1/cubrid1/DB/testdb_nodeB.cub (pid 9418, state registered)

Server testdb (pid 9306, state registered_and_active)

[nodeA]$

Use the cubrid changemode utility at each node in the CUBRID HA group to verify the status of the server.

Master node

[nodeA]$ cubrid changemode testdb@localhost

The server 'testdb@localhost''s current HA running mode is active.

Slave node

[nodeB]$ cubrid changemode testdb@localhost

The server 'testdb@localhost''s current HA running mode is standby.

Verifying the CUBRID HA Operation

Verify that a write performed on the active server of the master node is properly applied to the standby server of the slave node. To connect successfully via the CSQL Interpreter in an HA environment, you must specify the host name after the database name (in the form "@<host_name>"). If you specify localhost as the host name, the connection is made to the local node.

Warning

A table must have a primary key for replication to be processed successfully.

Master node

[nodeA]$ csql -u dba testdb@localhost -c "create table abc(a int, b int, c int, primary key(a));"

[nodeA]$ csql -u dba testdb@localhost -c "insert into abc values (1,1,1);"

[nodeA]$

Slave node

[nodeB]$ csql -u dba testdb@localhost -l -c "select * from abc;"

=== <Result of SELECT Command in Line 1> ===

<00001> a: 1

b: 1

c: 1

[nodeB]$

Configuring and Starting Broker, and Verifying the Broker Status¶

Configuring the Broker

To provide normal service during a database failover, the available database nodes must be configured in the db-host of databases.txt. In addition, ACCESS_MODE should be specified in the cubrid_broker.conf file; if it is omitted, the default is Read Write mode. If you run the broker on separate equipment, you must configure cubrid_broker.conf and databases.txt on the broker equipment.

databases.txt

#db-name vol-path db-host log-path lob-base-path

testdb /home1/cubrid1/CUBRID/testdb nodeA:nodeB /home1/cubrid1/CUBRID/testdb/log file:/home1/cubrid1/CUBRID/testdb/lob

cubrid_broker.conf

[%testdb_RWbroker]

SERVICE =ON

BROKER_PORT =33000

MIN_NUM_APPL_SERVER =5

MAX_NUM_APPL_SERVER =40

APPL_SERVER_SHM_ID =33000

LOG_DIR =log/broker/sql_log

ERROR_LOG_DIR =log/broker/error_log

SQL_LOG =ON

TIME_TO_KILL =120

SESSION_TIMEOUT =300

KEEP_CONNECTION =AUTO

CCI_DEFAULT_AUTOCOMMIT =ON

# broker mode parameter

ACCESS_MODE =RW

Starting Broker and Verifying its Status

A broker is used by applications such as JDBC, CCI, or PHP programs to access the database. Therefore, to simply test server redundancy, you can run the CSQL interpreter, which connects directly to the server processes, without starting a broker. To start a broker, execute cubrid broker start. To stop it, execute cubrid broker stop.

The following example shows how to execute a broker from the master node.

[nodeA]$ cubrid broker start

@ cubrid broker start

++ cubrid broker start: success

[nodeA]$ cubrid broker status

@ cubrid broker status

% testdb_RWbroker

---------------------------------------------------------

ID PID QPS LQS PSIZE STATUS

---------------------------------------------------------

1 9532 0 0 48120 IDLE

Configuring Applications

Specify the host name (nodeA_broker, nodeB_broker) and port that the application connects to in the connection URL. The altHosts attribute defines the broker to which the next connection is made when the connection to a broker fails. The following is an example of a JDBC program. For more information on CCI and PHP, see CCI Configuration and PHP Configuration.

Connection connection = DriverManager.getConnection("jdbc:CUBRID:nodeA_broker:33000:testdb:::?charSet=utf-8&altHosts=nodeB_broker:33000", "dba", "");
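When several brokers are involved, it can help to assemble the connection URL programmatically. The following Python sketch only builds the URL string in the layout shown in the JDBC line above; the helper name `build_cubrid_url` is hypothetical, and joining multiple altHosts entries with commas is an assumption that should be checked against JDBC Configuration.

```python
from typing import Sequence, Tuple

HostPort = Tuple[str, int]


def build_cubrid_url(primary: HostPort,
                     db_name: str,
                     alt_hosts: Sequence[HostPort] = (),
                     charset: str = "utf-8") -> str:
    """Build a connection URL in the layout of the JDBC example above.

    Hypothetical helper: it formats a string and performs no validation
    against the driver. Multiple alt hosts are joined with commas, which
    is an assumption.
    """
    host, port = primary
    url = f"jdbc:CUBRID:{host}:{port}:{db_name}:::?charSet={charset}"
    if alt_hosts:
        # Brokers to try, in order, when the primary broker is unreachable.
        alt = ",".join(f"{h}:{p}" for h, p in alt_hosts)
        url += f"&altHosts={alt}"
    return url


print(build_cubrid_url(("nodeA_broker", 33000), "testdb",
                       [("nodeB_broker", 33000)]))
```

With the arguments shown, the helper reproduces the URL used in the JDBC example.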

Environment Configuration¶

This section describes how to set up the HA environment. For further information on how a broker connects to a DB server, see Connecting a Broker to DB.

cubrid.conf¶

The cubrid.conf file that has general information on configuring CUBRID is located in the $CUBRID/conf directory. This page provides information about cubrid.conf parameters used by CUBRID HA.

HA or Not¶

ha_mode

ha_mode is a parameter used to configure whether to use CUBRID HA. The default value is off. CUBRID HA does not support Windows; it supports Linux only.

- off : CUBRID HA is not used.

- on : CUBRID HA is used. Failover is supported for its node.

- replica : CUBRID HA is used. Failover is not supported for its node.

The ha_mode parameter can be re-configured in the [@<database>] section; however, only off can be entered in that case. An error is returned if a value other than off is entered in the [@<database>] section.

If ha_mode is on, the CUBRID HA values are configured by reading cubrid_ha.conf.

This parameter cannot be modified dynamically. A restart is required for a new value to take effect.

Logging¶

log_max_archives

log_max_archives is a parameter used to configure the minimum number of archive log files to be archived. The minimum value is 0 and the default is INT_MAX (2147483647). When CUBRID is installed for the first time, this value is set to 0 in the cubrid.conf file. The behavior of the parameter is affected by force_remove_log_archives.

If force_remove_log_archives is set to no, archive log files that are referenced by an active transaction, or archive log files of the master node that have not yet been reflected to the slave node in an HA environment, are not deleted. For details, see force_remove_log_archives below.

For details about log_max_archives, see Logging-Related Parameters.

force_remove_log_archives

When you set up an HA environment by configuring ha_mode to on, it is recommended to set force_remove_log_archives to no so that the archive logs needed by HA-related processes are always retained.

If you set force_remove_log_archives to yes, archive log files that are still needed by HA-related processes can be deleted, and this may lead to inconsistency between replicated databases. Set the value to yes only if keeping free disk space matters more to you than this risk.

For details about force_remove_log_archives, see Logging-Related Parameters.

Note

From 2008 R4.3, in replica mode, archive logs are always deleted except for the number specified in the log_max_archives parameter, regardless of the force_remove_log_archives value.

Access¶

max_clients

max_clients is a parameter used to configure the maximum number of clients to be connected to a database server simultaneously. The default is 100.

Because the replication log copy and replication log reflection processes start by default when CUBRID HA is used, the value must account for twice the number of nodes in the CUBRID HA group, excluding the node itself. Furthermore, you must consider the case in which clients that were connected to another node at the time of a failover attempt to connect to this node.
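The sizing rule above is simple arithmetic. The sketch below is one possible reading of it, with hypothetical function names: it reserves two connections for every other node in the group and then adds the application clients you expect, including those that may arrive after a failover. How the reserve and the client counts should be combined is an assumption, not a formula from the manual.

```python
def ha_reserved_connections(node_count: int) -> int:
    """Connections reserved for HA processes on one node.

    Twice the number of nodes in the group, excluding the node itself.
    """
    if node_count < 1:
        raise ValueError("a group has at least one node")
    return 2 * (node_count - 1)


def suggested_max_clients(node_count: int,
                          local_clients: int,
                          failover_clients: int = 0) -> int:
    """Hypothetical sizing helper: HA reserve plus expected clients.

    `failover_clients` stands for clients that may move to this node
    from another node after a failover.
    """
    return (ha_reserved_connections(node_count)
            + local_clients + failover_clients)


# Two-node group, 100 local clients, 100 more that may arrive on failover.
print(suggested_max_clients(2, 100, 100))
```

For a two-node group this reserves two connections, so the example call prints 202.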

For details about max_clients, see Connection-Related Parameters.

The Parameters That Must Have the Same Value for All Nodes

- log_buffer_size : The size of a log buffer. This must be the same for all nodes, as it affects the protocol between the server and copylogdb, which copies the logs.

- log_volume_size : The size of a log volume. In CUBRID HA, the format and contents of a transaction log are the same as those of the replica log. Therefore, the parameter must be the same for all nodes. If each node creates its own DB, the cubrid createdb options (--db-volume-size, --db-page-size, --log-volume-size, --log-page-size, etc.) must be the same.

- cubrid_port_id : The TCP port number for creating a server connection. It must be the same for all nodes so that the server and copylogdb, which copies the logs, can connect.

- HA-related parameters : HA parameters included in cubrid_ha.conf must be identical by default. However, the parameters listed below can be set differently on each node.

The Parameters That Can be Different Among Nodes

- The ha_mode parameter in replica node

- The ha_copy_sync_mode parameter

- The ha_ping_hosts parameter
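A quick consistency check across nodes can catch mismatches in the must-match parameters before HA is started. The sketch below is a hypothetical helper, not a CUBRID tool: it assumes each node's cubrid.conf has already been read into a plain dictionary, and it compares only the three cubrid.conf parameters named in the list of parameters that must have the same value.

```python
from typing import Dict, List

# cubrid.conf parameters that must have the same value on all nodes.
MUST_MATCH = ("log_buffer_size", "log_volume_size", "cubrid_port_id")


def find_mismatches(configs: Dict[str, Dict[str, str]]) -> List[str]:
    """Return one message per parameter whose value differs between nodes.

    `configs` maps a node name to its already-parsed settings. Parsing
    the configuration files is outside the scope of this sketch.
    """
    problems: List[str] = []
    for key in MUST_MATCH:
        values = {node: conf.get(key) for node, conf in configs.items()}
        if len(set(values.values())) > 1:
            detail = ", ".join(f"{n}={v}" for n, v in sorted(values.items()))
            problems.append(f"{key}: {detail}")
    return problems


configs = {
    "nodeA": {"log_buffer_size": "4M", "log_volume_size": "512M",
              "cubrid_port_id": "1523"},
    "nodeB": {"log_buffer_size": "4M", "log_volume_size": "512M",
              "cubrid_port_id": "1524"},
}
print(find_mismatches(configs))
```

Values are compared as raw strings, so "512M" and "536870912" would be reported as different even if they denote the same size; normalizing units is left out to keep the sketch short.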

Example

The following example shows how to configure cubrid.conf. Take care when configuring log_max_archives and force_remove_log_archives (logging-related parameters), and ha_mode (an HA-related parameter).

# Service Parameters

[service]

service=server,broker,manager

# Server Parameters

server=testdb

data_buffer_size=512M

log_buffer_size=4M

sort_buffer_size=2M

max_clients=200

cubrid_port_id=1523

db_volume_size=512M

log_volume_size=512M

# Adds when configuring HA (Logging parameters)

log_max_archives=100

force_remove_log_archives=no

# Adds when configuring HA (HA mode)

ha_mode=on


cubrid_ha.conf¶

The cubrid_ha.conf file, which contains general information on CUBRID HA, is located in the $CUBRID/conf directory. CUBRID HA does not support Windows; it supports Linux only.

See Connecting a Broker to DB for further information regarding the process connecting between a broker and a DB server.

Node¶

ha_node_list

ha_node_list is a parameter used to configure the group name to be used in the CUBRID HA group and the host name of member nodes in which failover is supported. The group name is separated by @. The name before @ is for the group, and the names after @ are for host names of member nodes. A comma(,) or colon(:) is used to separate individual host names. The default is localhost@localhost.

Note

The host name of the member nodes specified in this parameter cannot be replaced with the IP. You should use the host names which are registered in /etc/hosts.

If the host name is not specified properly, the below message is written into the server.err error log file.

Time: 04/10/12 17:49:45.030 - ERROR *** file ../../src/connection/tcp.c, line 121 ERROR CODE = -353 Tran = 0, CLIENT = (unknown):(unknown)(-1), EID = 1 Cannot make connection to master server on host "Wrong_HOST_NAME".... Connection timed out

A node in which the ha_mode value is set to on must be specified in ha_node_list. The value of the ha_node_list of all nodes in the CUBRID HA group must be identical. When a failover occurs, a node becomes a master node in the order specified in the parameter.

This parameter can be modified dynamically. If you modify the value of this parameter, you must execute cubrid heartbeat reload to apply the changes.
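The `group@host1:host2` layout described above can be checked before a configuration is deployed. The following Python sketch is an illustration, not CUBRID code; the function name is hypothetical.

```python
import re


def parse_node_list(value: str):
    """Split an ha_node_list value such as 'cubrid@nodeA:nodeB'
    into (group_name, [host_names])."""
    group, sep, hosts = value.partition("@")
    if not sep or not group.strip() or not hosts.strip():
        raise ValueError(f"expected <group>@<host>[:<host>...], got {value!r}")
    # Individual host names are separated by a comma or a colon.
    host_list = [h.strip() for h in re.split(r"[,:]", hosts) if h.strip()]
    if not host_list:
        raise ValueError(f"no host names found in {value!r}")
    return group.strip(), host_list


print(parse_node_list("cubrid@nodeA:nodeB"))
```

Because the order of the hosts decides which node becomes the master at failover, the sketch keeps the hosts in a list rather than a set.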

ha_replica_list

ha_replica_list is a parameter used to configure the group name, which is used in the CUBRID HA group, and the replica nodes, which are host names of member nodes in which failover is not supported. There is no need to specify this if you do not construct replica nodes. The group name is separated by @. The name before @ is for the group, and the names after @ are for host names of member nodes. A comma(,) or colon(:) is used to separate individual host names. The default is NULL.

The group name must be identical to the name specified in ha_node_list. The host names of member nodes and the host names of nodes specified in this parameter must be registered in /etc/hosts. A node in which the ha_mode value is set to replica must be specified in ha_replica_list. The ha_replica_list values of all nodes in the CUBRID HA group must be identical.

This parameter can be modified dynamically. If you modify the value of this parameter, you must execute cubrid heartbeat reload to apply the changes.

Note

The host name of the member nodes specified in this parameter cannot be replaced with the IP. You should use the host names which are registered in /etc/hosts.

ha_db_list

ha_db_list is a parameter used to configure the name of the database that will run in CUBRID HA mode. The default is NULL. You can specify multiple databases by using a comma (,).

Note

The host name of the member nodes specified in this parameter cannot be replaced with the IP. You should use the host names which are registered in /etc/hosts.

Access¶

ha_port_id

ha_port_id is a parameter used to configure the UDP port number that is used to exchange heartbeat messages for failure detection. The default is 59901.

If a firewall exists in the service environment, the firewall must be configured to allow the configured port to pass through it.

ha_ping_hosts

ha_ping_hosts is a parameter used to configure the hosts that a slave node checks when it starts a failover, in order to verify whether the failover was triggered by an unstable network. The default is NULL. A comma(,) or colon(:) is used to separate individual host names.

The host names specified in this parameter can be replaced with IP addresses. When a host name is used, the name must be registered in /etc/hosts.

CUBRID checks the hosts specified in ha_ping_hosts every hour. If a host has a problem, the "ping check" is paused temporarily, and CUBRID checks every 5 minutes whether the host has returned to normal.

Configuring this parameter can prevent split-brain, a phenomenon in which two master nodes simultaneously exist as a result of the slave node erroneously detecting an abnormal termination of the master node due to unstable network status and then promoting itself as the new master.

Replication¶

ha_copy_sync_mode

ha_copy_sync_mode is a parameter used to configure the mode of storing the replication log, which is a copy of the transaction log. The default is SYNC.

The value can be either SYNC or ASYNC. The number of values must be the same as the number of nodes specified in ha_node_list, and the values must be listed in the same order as those nodes. You can specify multiple modes by using a comma(,) or colon(:). A replica node always works in ASYNC mode regardless of this value.

For details, see Log Multiplexing.
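The requirement that ha_copy_sync_mode has one entry per node in ha_node_list can be verified with a short script. This Python sketch is illustrative; the function name is hypothetical, and treating the mode names case-insensitively is an assumption (the manual writes SYNC/ASYNC but its sample configuration uses lowercase).

```python
import re

VALID_MODES = {"sync", "async"}


def pair_sync_modes(node_list: str, sync_mode: str) -> dict:
    """Pair each host in ha_node_list with its ha_copy_sync_mode entry."""
    # Hosts follow the '@'; both lists use ',' or ':' as separators.
    hosts = [h for h in re.split(r"[,:]", node_list.partition("@")[2]) if h]
    modes = [m.strip().lower() for m in re.split(r"[,:]", sync_mode) if m.strip()]
    unknown = [m for m in modes if m not in VALID_MODES]
    if unknown:
        raise ValueError(f"unknown sync mode(s): {unknown}")
    if len(modes) != len(hosts):
        raise ValueError(
            f"{len(hosts)} node(s) in ha_node_list but {len(modes)} sync mode(s)"
        )
    # Entries are matched by position.
    return dict(zip(hosts, modes))


print(pair_sync_modes("cubrid@nodeA:nodeB", "sync:sync"))
```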

ha_copy_log_base

ha_copy_log_base is a parameter used to configure the location of storing the transaction log copy. The default is $CUBRID_DATABASES/<db_name>_<host_name>.

For details, see Log Multiplexing.

ha_copy_log_max_archives

ha_copy_log_max_archives is a parameter used to configure the maximum number of replication log files to keep. The default is 1. However, replication log files that have not yet been applied to the database are not deleted, even if the number of files exceeds this value.

To avoid wasting disk space, it is recommended to keep this value at the default, 1.

ha_apply_max_mem_size

ha_apply_max_mem_size is a parameter used to configure the value of maximum memory that the replication log reflection process of CUBRID HA can use. The default and maximum values are 500 (unit: MB). When the value is larger than the size allowed by the system, memory allocation fails and the HA replication reflection process may malfunction. For this reason, you must check whether or not the memory resource can handle the specified value before setting it.

ha_applylogdb_ignore_error_list

ha_applylogdb_ignore_error_list is a parameter used to configure the errors that the replication log reflection process of CUBRID HA ignores so that replication can proceed. The error codes to be ignored are separated by a comma (,). This parameter takes priority: when an error code listed here also appears in the ha_applylogdb_retry_error_list parameter or in the default "List of Retry Errors," the retry setting is ignored and the task that caused the error is not retried. For "List of Retry Errors," see the description of ha_applylogdb_retry_error_list below.

ha_applylogdb_retry_error_list

ha_applylogdb_retry_error_list is a parameter used to configure the errors for which the replication log reflection process of CUBRID HA retries the failed task until it succeeds. When specifying errors to be retried, separate each error with a comma (,). The following table shows the default "List of Retry Errors." If any of these values also exist in ha_applylogdb_ignore_error_list, the ignore setting takes precedence and the error is not retried.

List of Retry Errors

| Error Code Name | Error Code |
|---|---|
| ER_LK_UNILATERALLY_ABORTED | -72 |
| ER_LK_OBJECT_TIMEOUT_SIMPLE_MSG | -73 |
| ER_LK_OBJECT_TIMEOUT_CLASS_MSG | -74 |
| ER_LK_OBJECT_TIMEOUT_CLASSOF_MSG | -75 |
| ER_LK_PAGE_TIMEOUT | -76 |
| ER_PAGE_LATCH_TIMEDOUT | -836 |
| ER_PAGE_LATCH_ABORTED | -859 |
| ER_LK_OBJECT_DL_TIMEOUT_SIMPLE_MSG | -966 |
| ER_LK_OBJECT_DL_TIMEOUT_CLASS_MSG | -967 |
| ER_LK_OBJECT_DL_TIMEOUT_CLASSOF_MSG | -968 |
| ER_LK_DEADLOCK_CYCLE_DETECTED | -1021 |
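The precedence between the two lists can be pictured as a set difference. The Python sketch below is a simplified model, not the applylogdb implementation. It assumes that codes in ha_applylogdb_retry_error_list are added to the default list and that codes are written as in the table above; the function names are hypothetical.

```python
# Default "List of Retry Errors" from the table above.
DEFAULT_RETRY_ERRORS = {-72, -73, -74, -75, -76, -836, -859, -966, -967, -968, -1021}


def parse_codes(value: str) -> set:
    """Parse a comma-separated list of error codes, e.g. '-72,-1021'."""
    return {int(tok) for tok in value.split(",") if tok.strip()}


def effective_retry_errors(ignore_list: str = "", retry_list: str = "") -> set:
    """Errors that are still retried once the ignore list is applied."""
    # Assumption: user-specified retry codes extend the defaults.
    retry = DEFAULT_RETRY_ERRORS | parse_codes(retry_list)
    # The ignore list has higher priority, so its codes are never retried.
    return retry - parse_codes(ignore_list)


print(sorted(effective_retry_errors(ignore_list="-72,-1021"), reverse=True))
```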

ha_replica_delay

This parameter specifies the delay before data replicated from a master node is applied to a replica node. CUBRID intentionally delays replication by the specified time. You can specify the unit as ms, s, min, or h, which stand for milliseconds, seconds, minutes, and hours respectively. If you omit the unit, milliseconds (ms) are assumed. The default value is 0.
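To make the unit rule concrete, the following Python sketch converts such a value to milliseconds. It is only an illustration of the notation described above (ms, s, min, h, with ms as the default unit); the function name is hypothetical and CUBRID's own parser may accept other spellings.

```python
import re

_UNIT_TO_MS = {"ms": 1, "s": 1000, "min": 60_000, "h": 3_600_000}


def to_milliseconds(value: str) -> int:
    """Convert a value such as '500', '30s', '10min' or '2h' to milliseconds."""
    match = re.fullmatch(r"\s*(\d+)\s*(ms|s|min|h)?\s*", value)
    if match is None:
        raise ValueError(f"not a valid time value: {value!r}")
    number, unit = match.groups()
    # A missing unit means milliseconds.
    return int(number) * _UNIT_TO_MS[unit or "ms"]


for sample in ("500", "30s", "10min", "2h"):
    print(sample, "->", to_milliseconds(sample))
```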

ha_replica_time_bound

Only the transactions that were run on the master node up to the time specified by this parameter are applied to the replica node. The format of this value is "YYYY-MM-DD hh:mi:ss". There is no default value.

Note

The following example shows how to configure cubrid_ha.conf.

[common]

ha_node_list=cubrid@nodeA:nodeB

ha_db_list=testdb

ha_copy_sync_mode=sync:sync

ha_apply_max_mem_size=500

Note

The following example shows how to configure the value of /etc/hosts (a host name of a member node: nodeA, IP: 192.168.0.1).

127.0.0.1 localhost.localdomain localhost

192.168.0.1 nodeA

ha_delay_limit

ha_delay_limit is the threshold that CUBRID uses to judge that replication is delayed, and ha_delay_limit_delta is the amount subtracted from ha_delay_limit to obtain the point at which the delay is considered released. Once a server is judged to be delayed in replication, it keeps this status until the replication delay is equal to or lower than (ha_delay_limit - ha_delay_limit_delta). The servers judged for replication delay are standby DB servers, that is, slave nodes and replica nodes.

For example, to treat a replication delay of 10 minutes as delayed and a delay of 8 minutes as released, set ha_delay_limit to 600s (or 10min) and ha_delay_limit_delta to 120s (or 2min).

When a standby DB is judged to be delayed in replication, CAS assumes that the standby DB has a problem processing jobs and attempts to reconnect to another standby DB.

A CAS that is connected to a lower-priority DB because of the replication delay expects the delay to be released once the time specified by RECONNECT_TIME in cubrid_broker.conf has elapsed, and then attempts to reconnect to the higher-priority standby DB.
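The two thresholds form a hysteresis band. The Python sketch below models that behavior with the 600s/120s example from above. It is a simplified illustration with a hypothetical class name; whether the flag is raised when the delay reaches or strictly exceeds ha_delay_limit is an assumption.

```python
class ReplicationDelayTracker:
    """Tracks the 'replication delayed' flag with hysteresis."""

    def __init__(self, delay_limit_s: int, delay_limit_delta_s: int):
        self.delay_limit_s = delay_limit_s
        # The delay is released at (ha_delay_limit - ha_delay_limit_delta).
        self.release_at_s = delay_limit_s - delay_limit_delta_s
        self.delayed = False

    def update(self, current_delay_s: float) -> bool:
        if self.delayed:
            # Stay delayed until the delay drops to the release point.
            if current_delay_s <= self.release_at_s:
                self.delayed = False
        elif current_delay_s > self.delay_limit_s:
            # Assumption: the flag is raised once the delay exceeds the limit.
            self.delayed = True
        return self.delayed


tracker = ReplicationDelayTracker(delay_limit_s=600, delay_limit_delta_s=120)
for delay in (590, 610, 500, 480):
    print(delay, tracker.update(delay))
```

A delay of 500 seconds keeps the server in the delayed state because it is still above the 480-second release point; the flag clears only at 480 seconds or less.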

ha_delay_limit_delta

See the above description of ha_delay_limit.

ha_copy_log_timeout

This is the maximum time that a node's database server process (cub_server) waits for a response from another node's replication log copy process (copylogdb). The default is 5 (seconds). If this value is -1, the server waits indefinitely.

ha_monitor_disk_failure_interval

CUBRID checks for disk failure at the interval specified by this parameter. The default is 30, and the unit is seconds.

- If the value of the ha_copy_log_timeout parameter is -1, the value of the ha_monitor_disk_failure_interval parameter is ignored and disk failure is not checked.

- If the value of the ha_monitor_disk_failure_interval parameter is smaller than the value of the ha_copy_log_timeout parameter, disk failure is checked every (ha_copy_log_timeout + 20) seconds.
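The interaction between the two parameters can be summarized as a small function. This Python sketch only restates the rules above; the function name is hypothetical.

```python
from typing import Optional


def disk_check_interval(copy_log_timeout_s: int,
                        monitor_interval_s: int = 30) -> Optional[int]:
    """Effective disk-failure check interval in seconds, or None if disabled."""
    if copy_log_timeout_s == -1:
        # Infinite wait: the monitoring interval is ignored entirely.
        return None
    if monitor_interval_s < copy_log_timeout_s:
        # The interval is too short, so the timeout plus 20 seconds is used.
        return copy_log_timeout_s + 20
    return monitor_interval_s


print(disk_check_interval(5))       # defaults: timeout 5, interval 30
print(disk_check_interval(60, 30))  # interval smaller than timeout
print(disk_check_interval(-1, 30))  # disk failure is not checked
```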

ha_unacceptable_proc_restart_timediff

If a server process stays in an abnormal state, it can be restarted endlessly; it is better to remove such a node from the HA components. Because a server in a persistently abnormal state is restarted again within a short time, this parameter specifies the interval used to detect that situation. If the server is restarted within the specified interval, CUBRID assumes that the server is abnormal and removes (demotes) the node from the HA components. The default is 2min. If the unit is omitted, milliseconds (msec) are assumed.

Prefetch Log¶

The following parameters are used by the prefetchlogdb utility.

ha_prefetchlogdb_enable

Specifies whether CUBRID uses the prefetchlogdb process. The default is no.

ha_prefetchlogdb_max_thread_count

The maximum number of threads that perform prefetching. The default is 4. A suitable value is about "(number of CPU cores on the server)/2".

If this value is too large, CPU usage can increase rapidly; if it is too small, prefetching has little effect.

ha_prefetchlogdb_max_page_count

The maximum number of pages that can be prefetched. The default is 1000.

SQL Logging¶

ha_enable_sql_logging

If the value of this parameter is yes, CUBRID generates a log file of the SQL that the applylogdb process applies to the DB volume. The log file is located in the sql_log directory under the replication log directory (ha_copy_log_base). The default is no.

The format of the log file name is <db name>_<master hostname>.sql.log.<id>, where <id> starts from 0. When the file size exceeds ha_sql_log_max_size_in_mbytes, a new file named with "<id> + 1" is created. For example, if "ha_sql_log_max_size_in_mbytes=100", demodb_nodeA.sql.log.1 is created when the size of demodb_nodeA.sql.log.0 reaches 100MB.

SQL log files accumulate while this parameter is enabled; therefore, you should remove old log files manually to keep free disk space.

The SQL log format is as follows.

INSERT/DELETE/UPDATE

-- date | SQL id | SELECT query's length for sampling | the length of a transformed query

-- SELECT query for sampling

transformed query

-- 2013-01-25 15:16:41 | 40083 | 33 | 114

-- SELECT * FROM [t1] WHERE "c1"=79186;

INSERT INTO [t1]("c1", "c2", "c3") VALUES (79186,'b3beb3decd2a6be974',0);

DDL

-- date | SQL id | 0 | the length of a transformed query

DDL query

(GRANT query will follow when CREATE TABLE, to grant the authority to the table created with DBA authority.)

-- 2013-01-25 14:22:59 | 1 | 0 | 50

create class t1 ( id integer, primary key (id) );

-- 2013-01-25 14:22:59 | 2 | 0 | 38

GRANT ALL PRIVILEGES ON [t1] TO public;
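The header comment of each entry can be read mechanically, for example when replaying the log from a given point. The Python sketch below parses the four fields shown in the format above. The function name and field names are hypothetical, and treating a zero in the third field as a DDL marker is an assumption based on the DDL format line.

```python
import re
from datetime import datetime

# "-- <date> | <SQL id> | <SELECT length> | <transformed query length>"
_HEADER = re.compile(
    r"^-- (\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) \| (\d+) \| (\d+) \| (\d+)"
)


def parse_sql_log_header(line: str):
    """Return the header fields as a dict, or None if the line is not a header."""
    match = _HEADER.match(line)
    if match is None:
        return None
    timestamp, sql_id, select_len, query_len = match.groups()
    return {
        "time": datetime.strptime(timestamp, "%Y-%m-%d %H:%M:%S"),
        "sql_id": int(sql_id),
        "select_length": int(select_len),
        "query_length": int(query_len),
        # Assumption: DDL entries are the ones whose third field is 0.
        "is_ddl": int(select_len) == 0,
    }


print(parse_sql_log_header("-- 2013-01-25 15:16:41 | 40083 | 33 | 114"))
print(parse_sql_log_header('-- SELECT * FROM [t1] WHERE "c1"=79186;'))
```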

Warning

When you apply this SQL log from a specific point to create another DB, triggers should be turned off, because the jobs performed by triggers on the master node are already written to the SQL log file.

- See TRIGGER_ACTION for turning off the triggers with a broker configuration.

- See csql --no-trigger-action for turning off the triggers when you run CSQL.

ha_sql_log_max_size_in_mbytes

This parameter specifies the maximum size, in megabytes, of a log file that is created when the SQL applied to the DB by the applylogdb process is logged. A new file is created when the size of the log file exceeds this value.

cubrid_broker.conf¶

The cubrid_broker.conf file that has general information on configuring CUBRID broker is located in the $CUBRID/conf directory. This section explains the parameters of cubrid_broker.conf that are used by CUBRID HA.

See Connecting a Broker to DB for further information regarding the process connecting between a broker and a DB server.

Access Target¶

ACCESS_MODE

ACCESS_MODE is a parameter used to configure the mode of a broker. The default is RW.

Its value can be one of the following: RW (Read Write), RO (Read Only), SO (Standby Only), or PHRO (Preferred Host Read Only). For details, see Broker Mode.

REPLICA_ONLY

If the value of REPLICA_ONLY is ON, CAS connects only to a replica DB. The default is OFF. If the value of REPLICA_ONLY is ON and the value of ACCESS_MODE is RW, write operations are possible even on a replica DB.

Access Order¶

CONNECT_ORDER

This parameter specifies whether a CAS connects to hosts in sequential or random order. The hosts are those configured in db-host of $CUBRID_DATABASES/databases.txt.

The default is SEQ; CAS tries to connect in the listed order. When this value is RANDOM, CAS tries to connect in random order. If the PREFERRED_HOSTS parameter is specified, CAS first tries to connect to the hosts configured in PREFERRED_HOSTS in the listed order, and uses the value of db-host only when the connections to PREFERRED_HOSTS fail; CONNECT_ORDER does not affect the order of PREFERRED_HOSTS.

If you are concerned that connections will be concentrated on one DB, set this value to RANDOM.

PREFERRED_HOSTS

Specifies the order of connection by listing host names. The default value is NULL.

You can specify multiple nodes by using a colon (:). The broker tries to connect to the hosts specified in the PREFERRED_HOSTS parameter first, and then to the hosts specified in $CUBRID_DATABASES/databases.txt.

The following example shows how to configure cubrid_broker.conf. To access localhost with first priority, set PREFERRED_HOSTS to localhost.

[%PHRO_broker]

SERVICE =ON

BROKER_PORT =33000

MIN_NUM_APPL_SERVER =5

MAX_NUM_APPL_SERVER =40

APPL_SERVER_SHM_ID =33000

LOG_DIR =log/broker/sql_log

ERROR_LOG_DIR =log/broker/error_log

SQL_LOG =ON

TIME_TO_KILL =120

SESSION_TIMEOUT =300

KEEP_CONNECTION =AUTO

CCI_DEFAULT_AUTOCOMMIT =ON

# Broker mode setting parameter

ACCESS_MODE =RO

PREFERRED_HOSTS =localhost

Access Limitation¶

MAX_NUM_DELAYED_HOSTS_LOOKUP

When multiple DB servers are specified in db-host of databases.txt in an HA environment and replication is delayed on all of them, CUBRID checks replication-delayed servers only up to the number specified in the MAX_NUM_DELAYED_HOSTS_LOOKUP parameter and then decides the connection. (Replication delay is judged only for standby hosts; the delay threshold is set by the ha_delay_limit parameter.) MAX_NUM_DELAYED_HOSTS_LOOKUP is not applied to PREFERRED_HOSTS.

For example, when db-host is specified as "host1:host2:host3:host4:host5" and "MAX_NUM_DELAYED_HOSTS_LOOKUP=2", and the status of the hosts is as follows,

- host1: active status

- host2: standby status, replication is delayed

- host3: unable to access

- host4: standby status, replication is delayed

- host5: standby status, replication is not delayed

then the broker tries to access the two replication-delayed hosts, host2 and host4, and decides to access host4.

The reason for this behavior is that CUBRID assumes replication is also delayed on the remaining hosts once the number of hosts specified by MAX_NUM_DELAYED_HOSTS_LOOKUP have been found to be delayed; therefore, CUBRID connects to the last host it tried and does not try the remaining hosts. However, if PREFERRED_HOSTS is also specified, CUBRID tries to access those hosts first and then tries the hosts in the db-host list from the beginning.
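The host1 to host5 example can be replayed with a small simulation. The Python sketch below is a deliberately simplified model of the primary lookup for a broker that wants a standby DB; it ignores PREFERRED_HOSTS and CONNECT_ORDER, and all names in it are hypothetical rather than taken from CUBRID.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Host:
    name: str
    status: str            # "active" or "standby"
    reachable: bool = True
    delayed: bool = False  # replication delay, meaningful for standby hosts


def pick_host(hosts: List[Host], wanted_status: str,
              max_delayed_lookup: int = -1) -> Optional[str]:
    """Walk db-host in order and return the chosen host name, or None."""
    if max_delayed_lookup == 0:
        # With 0, only PREFERRED_HOSTS are tried at this step (not modeled).
        return None
    delayed_seen = 0
    for host in hosts:
        if not host.reachable or host.status != wanted_status:
            continue
        if not host.delayed:
            return host.name
        delayed_seen += 1
        # Stop looking once the configured number of delayed hosts was seen
        # and settle for the last host that was checked.
        if max_delayed_lookup > 0 and delayed_seen >= max_delayed_lookup:
            return host.name
    return None


db_hosts = [
    Host("host1", "active"),
    Host("host2", "standby", delayed=True),
    Host("host3", "standby", reachable=False),
    Host("host4", "standby", delayed=True),
    Host("host5", "standby"),
]
print(pick_host(db_hosts, "standby", max_delayed_lookup=2))
print(pick_host(db_hosts, "standby", max_delayed_lookup=-1))
```

With a limit of 2 the model stops at host4, as in the example; with -1 it keeps looking and reaches host5, whose replication is not delayed.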

The process by which the broker accesses the DB is divided into the primary connection and the secondary connection.

The primary connection: the step in which the broker first tries to access the DB.

It checks the DB status (active/standby) and whether replication is delayed. At this time, the broker checks whether the DB status is active or standby according to ACCESS_MODE and then decides the connection.

The secondary connection: after the primary connection fails, the broker tries to connect again, starting from the position where it failed. At this time, the broker ignores the DB status (active/standby) and the replication delay. However, an SO broker always accepts a connection only to a standby DB.

At this step, the connection is made if the DB is accessible, regardless of the replication delay and the DB status (active/standby). However, an error can occur during query execution. For example, if ACCESS_MODE of the broker is RW but the broker accesses a standby DB, an error occurs during an INSERT operation. Regardless of the error, after the broker is connected to a standby DB and the transaction is executed, the broker retries the primary connection. However, an SO broker can never connect to the active DB.

Depending on the value of MAX_NUM_DELAYED_HOSTS_LOOKUP, the number of hosts that the broker attempts to connect to is limited as follows.

MAX_NUM_DELAYED_HOSTS_LOOKUP=-1

This is the same as not specifying the parameter; -1 is the default value. In this case, at the primary step, the replication delay and the DB status are checked for every host to the end of the list, and then the connection is decided. At the secondary step, the broker connects to the last host that was accessible, even if replication is delayed or the DB status (active/standby) is not the expected one.

MAX_NUM_DELAYED_HOSTS_LOOKUP=0

At the primary step, the connection is tried only to PREFERRED_HOSTS, and then the secondary connection is processed; at the secondary step, the broker tries to connect to a host even if its replication is delayed or its DB status (active/standby) is not the expected one. That is, because it is the secondary connection, an RW broker can connect to a standby host and an RO broker can connect to an active host. However, an SO broker can never connect to the active DB.

MAX_NUM_DELAYED_HOSTS_LOOKUP=n(>0)

The broker checks replication-delayed hosts up to the specified number. At the primary connection, the broker inspects hosts until it has found the specified number of replication-delayed hosts; at the secondary connection, the broker connects to a host even if its replication is delayed.

Reconnection¶

RECONNECT_TIME

When a broker is connected to a DB server that is not in PREFERRED_HOSTS, an RO broker is connected to an active DB server, or a broker is connected to a replication-delayed DB server, the broker tries to reconnect once the connection has lasted longer than RECONNECT_TIME (default: 10min).

See RECONNECT_TIME for further information.

databases.txt¶

The databases.txt file contains the list of servers, in order, to which the CAS of a broker connects. It is located in the $CUBRID_DATABASES directory (if not specified, $CUBRID/databases); the servers are configured in the db-host field. You can specify multiple nodes by using a colon (:). If "CONNECT_ORDER=RANDOM", the connection order is decided randomly. However, if PREFERRED_HOSTS is specified, the specified hosts have first priority in the connection order.

The following example shows how to configure databases.txt.

#db-name vol-path db-host log-path lob-base-path

testdb /home/cubrid/DB/testdb nodeA:nodeB /home/cubrid/DB/testdb/log file:/home/cubrid/DB/testdb/lob
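To see which hosts a broker would try for a database, the db-host column can be extracted with a few lines of code. This Python sketch assumes the whitespace-separated column layout shown in the example above; the function name is hypothetical.

```python
def parse_databases_txt(text: str) -> dict:
    """Map each database name to its volume path, host list, and log path."""
    entries = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and the '#db-name ...' comment header.
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        if len(fields) < 3:
            continue  # not enough columns to contain db-host
        name, vol_path, db_host = fields[:3]
        entries[name] = {
            "vol_path": vol_path,
            # db-host lists the nodes in connection order, separated by ':'.
            "hosts": db_host.split(":"),
            "log_path": fields[3] if len(fields) > 3 else None,
        }
    return entries


sample = """#db-name vol-path db-host log-path lob-base-path
testdb /home/cubrid/DB/testdb nodeA:nodeB /home/cubrid/DB/testdb/log file:/home/cubrid/DB/testdb/lob
"""
print(parse_databases_txt(sample)["testdb"]["hosts"])
```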

JDBC Configuration¶

To use CUBRID HA in JDBC, you must specify the connection information of another broker (nodeB_broker) to be connected when a failure occurs in broker (nodeA_broker). The attribute configured for CUBRID HA is altHosts which represents information of one or more broker nodes to be connected. For details, see Configuration Connection.

The following example shows how to configure JDBC:

Connection connection = DriverManager.getConnection("jdbc:CUBRID:nodeA_broker:33000:testdb:::?charSet=utf-8&altHosts=nodeB_broker:33000", "dba", "");

CCI Configuration¶

To use CUBRID HA in CCI, you must use the cci_connect_with_url() function which additionally allows specifying connection information in connection URL; the connection information is used when a failure occurs in broker. The attribute configured for CUBRID HA is altHosts which represents information of one or more broker nodes to be connected.

The following example shows how to configure CCI.

con = cci_connect_with_url ("cci:CUBRID:nodeA_broker:33000:testdb:::?altHosts=nodeB_broker:33000", "dba", NULL);

if (con < 0)

{

printf ("cannot connect to database\n");

return 1;

}

PHP Configuration¶

To use the functions of CUBRID HA in PHP, connect to the broker by using cubrid_connect_with_url , which is used to specify the connection information of the failover broker in the connection URL. The attribute specified for CUBRID HA is altHosts, the information on one or more broker nodes to be connected when a failover occurs.

The following example shows how to configure PHP.

<?php

$con = cubrid_connect_with_url ("cci:CUBRID:nodeA_broker:33000:testdb:::?altHosts=nodeB_broker:33000", "dba", NULL);

if (!$con)

{

printf ("cannot connect to database\n");

return 1;

}

?>

Note

For broker failover to work smoothly in an environment where it is enabled by setting altHosts, you should set the value of disconnectOnQueryTimeout in the URL to true.

If this value is true, when a query timeout occurs the application releases its existing connection to the broker and reconnects to another broker specified in altHosts.
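The JDBC, CCI, and PHP examples above share the same URL layout, so the failover-related properties can be assembled in one place. The Python sketch below only builds the URL string; the helper name is hypothetical, and joining several altHosts entries with a comma is an assumption to verify against the driver documentation.

```python
def build_cubrid_url(scheme, host, port, db_name, alt_hosts=(), **properties):
    """Build '<scheme>:CUBRID:<host>:<port>:<db>:::?key=value&...'.

    scheme    -- 'jdbc' or 'cci', as in the examples above
    alt_hosts -- (host, port) pairs of brokers to use after a failure
    """
    query = {}
    if alt_hosts:
        # Assumption: multiple alternative brokers are comma-separated.
        query["altHosts"] = ",".join(f"{h}:{p}" for h, p in alt_hosts)
    query.update(properties)
    url = f"{scheme}:CUBRID:{host}:{port}:{db_name}:::"
    if query:
        url += "?" + "&".join(f"{key}={value}" for key, value in query.items())
    return url


print(build_cubrid_url(
    "jdbc", "nodeA_broker", 33000, "testdb",
    alt_hosts=[("nodeB_broker", 33000)],
    disconnectOnQueryTimeout="true",
))
```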

Connecting a Broker to DB¶

A broker in an HA environment must choose one DB server to connect to among multiple DB servers. How the broker connects and which DB server it chooses depend on the settings of the DB servers and the broker. This chapter describes how a broker chooses a DB server according to the HA environment settings. See Environment Configuration for a description of each parameter used in the environment settings.

Here are the main parameters used in the DB connection with the broker.

| Location | Configuration file | Parameter name | Description |
|---|---|---|---|
| DB server | cubrid.conf | ha_mode | HA mode (on/off/replica) of the DB server. Default: off |
| DB server | cubrid_ha.conf | ha_delay_limit | The period used to determine whether replication is delayed |
| DB server | cubrid_ha.conf | ha_delay_limit_delta | The time obtained by subtracting the replication-delay release time from the replication-delay time |
| Broker | cubrid_broker.conf | ACCESS_MODE | Broker mode (RW/RO/SO). Default: RW |
| Broker | cubrid_broker.conf | REPLICA_ONLY | Whether to connect to the REPLICA server (ON/OFF). Default: OFF |
| Broker | cubrid_broker.conf | PREFERRED_HOSTS | Hosts specified here are tried before the hosts set in db-host of databases.txt |
| Broker | cubrid_broker.conf | MAX_NUM_DELAYED_HOSTS_LOOKUP | The number of hosts in databases.txt to check for replication delay. If the specified number of hosts are found to be delayed, the broker connects to the host checked last. |
| Broker | cubrid_broker.conf | RECONNECT_TIME | Time after which the broker tries to reconnect when it is connected to an improper DB server. Default: 600s. If this value is 0, the broker does not try to reconnect. |
| Broker | cubrid_broker.conf | CONNECT_ORDER | Whether to connect in sequential or random order (SEQ/RANDOM). Default: SEQ |

Connection Process¶

When a broker accesses a DB server, it tries the primary connection first; if that fails, it tries the secondary connection.

- The primary connection: checks the DB status (active/standby) and the replication delay.

  - The broker tries to connect in the order specified by PREFERRED_HOSTS. The broker refuses to connect to an improper DB, that is, one whose status does not match ACCESS_MODE or whose replication is delayed.

  - According to CONNECT_ORDER, the broker tries to connect to the hosts in the order specified in databases.txt or in random order. The broker checks the DB status according to ACCESS_MODE and checks replication-delayed hosts up to the number specified in MAX_NUM_DELAYED_HOSTS_LOOKUP.

- The secondary connection: ignores the DB status (active/standby) and the replication delay. However, an SO broker always connects only to a standby DB.

  - The broker tries to connect in the order specified by PREFERRED_HOSTS. The broker accepts a connection to an improper DB whose status does not match ACCESS_MODE or whose replication is delayed. However, an SO broker can never connect to the active DB.

  - According to CONNECT_ORDER, the broker tries to connect to the hosts in the order specified in databases.txt or in random order. The broker ignores the DB status (active/standby) and the replication delay; it connects if the DB is accessible.
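The two-step process can be sketched as two passes over the host list. The Python model below is intentionally reduced: it leaves out PREFERRED_HOSTS, CONNECT_ORDER, and MAX_NUM_DELAYED_HOSTS_LOOKUP, it always starts the secondary pass from the first host, and it assumes that an RW broker wants an active DB while RO and SO brokers want a standby DB. The function and key names are hypothetical.

```python
def choose_db(hosts, access_mode):
    """Return (host_name, step) where step is 'primary' or 'secondary'.

    hosts is a list of dicts with the keys
    'name', 'status' ('active'/'standby'), 'reachable', and 'delayed'.
    """
    wanted = "active" if access_mode == "RW" else "standby"

    # Primary connection: the status must match and replication
    # must not be delayed.
    for host in hosts:
        if host["reachable"] and host["status"] == wanted and not host["delayed"]:
            return host["name"], "primary"

    # Secondary connection: status and delay are ignored, except that
    # an SO broker still connects only to a standby DB.
    for host in hosts:
        if not host["reachable"]:
            continue
        if access_mode == "SO" and host["status"] != "standby":
            continue
        return host["name"], "secondary"

    return None, None


cluster = [
    {"name": "host1", "status": "active", "reachable": True, "delayed": False},
    {"name": "host2", "status": "standby", "reachable": True, "delayed": True},
    {"name": "host3", "status": "standby", "reachable": False, "delayed": False},
]
for mode in ("RW", "RO", "SO"):
    print(mode, choose_db(cluster, mode))
```

In this model the RO broker ends up on the active host at the secondary step, which is the situation in which the broker later tries to reconnect after RECONNECT_TIME.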

Samples of Behaviors by Configuration¶

The following shows the example of configuration.

Host DB status

- host1: active

- host2: standby, replication is delayed.

- host3: standby, replica, unable to access.

- host4: standby, replica, replication is delayed.

- host5: standby, replica, replication is delayed.

When the status of host DBs are as the above, the below shows samples of behaviors by the configuration.

Behaviors by configuration

- 2-1, 2-2, 2-3: From 2, (+) is addition and (#) is modification.

- 3-1, 3-2, 3-3: From 3, (+) is addition and (#) is modification.

| No. | Configuration | Behavior |
|---|---|---|
| 1 | | At the primary connection try, a broker checks if DB status is active. The broker tries to reconnect after the RECONNECT_TIME because it did not connect to PREFERRED_HOSTS. |
| 2 | | At the primary connection try, a broker checks if DB status is standby. At the secondary connection try, a broker ignores DB status and replication-delay. Because the broker accessed the replication-delayed server, it tries to reconnect after RECONNECT_TIME. |
| 2-1 | | At the primary connection try, a broker checks if DB status is standby. At the secondary connection try, a broker ignores DB status and replication-delay. Because the broker accessed the active server, it tries to reconnect after RECONNECT_TIME. |
| 2-2 | | At the primary connection try, a broker checks if DB status is standby. At the secondary connection try, a broker ignores DB status and replication-delay. Because the broker accessed the active server, it tries to reconnect after RECONNECT_TIME. |
| 2-3 | | At the primary connection try, a broker checks if DB status is standby. At the secondary connection try, a broker ignores DB status and replication-delay. Because the broker accessed the replication-delayed server, it tries to reconnect after RECONNECT_TIME. |
| 3 | | At the primary connection try, a broker checks if DB status is standby. At the secondary connection try, a broker checks if DB status is standby but ignores replication-delay. Because the broker accessed the replication-delayed server, it tries to reconnect after RECONNECT_TIME. |
| 3-1 | | At the primary connection try, a broker checks if DB status is standby. At the secondary connection try, a broker checks if DB status is standby but ignores replication-delay. Because the broker accessed the replication-delayed server, it tries to reconnect after RECONNECT_TIME. |
| 3-2 | | At the primary connection try, a broker checks if DB status is standby. At the secondary connection try, a broker checks if DB status is standby but ignores replication-delay. Because the broker accessed the replication-delayed server, it tries to reconnect after RECONNECT_TIME. |
| 3-3 | | At the primary connection try, a broker checks if DB status is standby. At the secondary connection try, a broker checks if DB status is standby but ignores replication-delay. Because the broker accessed the replication-delayed server, it tries to reconnect after RECONNECT_TIME. |

Running and Monitoring¶

cubrid heartbeat Utility¶

The cubrid heartbeat command can also be run as cubrid hb, its abbreviated form.

start¶

This utility is used to activate the CUBRID HA feature and start all CUBRID HA processes in the node (database server process, replication log copy process, and replication log reflection process). Note that whether a node becomes the master node or a slave node is determined by the order in which cubrid heartbeat start is executed.

How to execute the command is as shown below.

$ cubrid heartbeat start

The database server process configured in HA mode cannot be started with the cubrid server start command.

Specify the database name at the end of the command to run only the HA configuration processes (database server process, replication log copy process, and replication log reflection process) of a specific database in the node. For example, use the following command to run the database testdb only:

$ cubrid heartbeat start testdb

stop¶

This utility is used to deactivate the CUBRID HA feature and stop all of its components. The node that executes this command stops, and a failover occurs to the next slave node according to the CUBRID HA configuration.

How to use this utility is as shown below.

$ cubrid heartbeat stop

The database server process cannot be stopped with the cubrid server stop command.

Specify the database name at the end of the command to stop only the HA configuration processes (database server process, replication log copy process, and replication log reflection process) of a specific database in the node. For example, use the following command to stop the database testdb only:

$ cubrid heartbeat stop testdb

To deactivate the CUBRID HA feature immediately, add the -i option to the cubrid heartbeat stop command. Use this option when a quick shutdown is required because the DB server process is not working properly.

$ cubrid heartbeat stop -i

or

$ cubrid heartbeat stop --immediately

copylogdb¶

This utility starts or stops the copylogdb process that copies the transaction logs for db_name from a specific peer_node in the CUBRID HA configuration. You can pause log copying in the middle of operation, for example to rebuild replication, and then resume it whenever you want.

Even if only the cubrid heartbeat copylogdb start command has succeeded, failure detection and recovery between the nodes are performed. Because the node then becomes a failover target, a slave node can be changed to the master node.

How to use this utility is as shown below.

$ cubrid heartbeat copylogdb <start|stop> db_name peer_node

When nodeB is a node to run a command and nodeA is peer_node, you can run the command as follows.

[nodeB]$ cubrid heartbeat copylogdb stop testdb nodeA

[nodeB]$ cubrid heartbeat copylogdb start testdb nodeA

When the copylogdb process is started or stopped, the configuration in cubrid_ha.conf is used. We recommend that you avoid changing this configuration as far as possible once it has been set. If you must change it, we recommend restarting all nodes.

applylogdb¶

This utility starts or stops the applylogdb process that reflects the transaction logs for db_name from a specific peer_node in the CUBRID HA configuration. You can pause log reflection in the middle of operation, for example to rebuild replication, and then resume it whenever you want.

Even if only the cubrid heartbeat applylogdb start command has succeeded, failure detection and recovery between the nodes are performed. Because the node then becomes a failover target, a slave node can be changed to the master node.

How to use this utility is as shown below.

$ cubrid heartbeat applylogdb <start|stop> db_name peer_node

When nodeB is a node to run a command and nodeA is peer_node, you can run the command as follows.

[nodeB]$ cubrid heartbeat applylogdb stop testdb nodeA

[nodeB]$ cubrid heartbeat applylogdb start testdb nodeA

When the applylogdb process is started or stopped, the configuration in cubrid_ha.conf is used. We recommend that you avoid changing this configuration as far as possible once it has been set. If you must change it, we recommend restarting all nodes.

reload¶

This utility reloads the CUBRID HA configuration information. To start or stop the HA replication processes of added or removed nodes all at once, use the cubrid heartbeat replication start/stop command.

How to use this utility is as shown below.

$ cubrid heartbeat reload

The reconfigurable parameters are ha_node_list and ha_replica_list. Even if an error occurs on a particular node while this command is running, the remaining jobs continue. After the reload command finishes, check whether the node reconfiguration was applied correctly. If it was not, find the cause and resolve it.

replication(or repl) start¶

This utility starts, in one batch, the HA processes (copylogdb/applylogdb) related to a specific node. It is generally used to start the HA replication processes of added nodes after running cubrid heartbeat reload.

The replication command can be abbreviated as repl.

cubrid heartbeat repl start <node_name>

- node_name: one of nodes specified in ha_node_list of cubrid_ha.conf.

replication(or repl) stop¶

This utility stops, in one batch, the HA processes (copylogdb/applylogdb) related to a specific node. It is generally used to stop the HA replication processes of removed nodes after running cubrid heartbeat reload.

The replication command can be abbreviated as repl.

cubrid heartbeat repl stop <node_name>

- node_name: one of nodes specified in ha_node_list of cubrid_ha.conf.
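The reload and repl start/stop steps described above can be sketched as a small planning helper. This is only an illustration: the function name plan_reload_commands and the idea of passing the old and new node lists as Python lists are assumptions, and the helper only builds the command lines documented in this section without running them.

```python
def plan_reload_commands(old_nodes, new_nodes):
    """Build the command lines to run after the node list has changed.

    old_nodes / new_nodes are plain lists of node names (hypothetical
    inputs; reading them from cubrid_ha.conf is left to the caller).
    """
    added = [n for n in new_nodes if n not in old_nodes]
    removed = [n for n in old_nodes if n not in new_nodes]

    # Reload first, then start or stop the replication processes in batch.
    commands = [["cubrid", "heartbeat", "reload"]]
    commands += [["cubrid", "heartbeat", "repl", "start", n] for n in added]
    commands += [["cubrid", "heartbeat", "repl", "stop", n] for n in removed]
    return commands


if __name__ == "__main__":
    plan = plan_reload_commands(["nodeA", "nodeB"], ["nodeA", "nodeB", "nodeC"])
    for argv in plan:
        print(" ".join(argv))
```

Printing the plan before executing it makes it easier to review which nodes will be affected, which fits the advice to verify the reconfiguration after reload.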

status¶

This utility is used to output the information of CUBRID HA group and CUBRID HA components. How to use this utility is as shown below.

$ cubrid heartbeat status

@ cubrid heartbeat status

HA-Node Info (current nodeB, state slave)

Node nodeB (priority 2, state slave)

Node nodeA (priority 1, state master)

HA-Process Info (master 2143, state slave)

Applylogdb testdb@localhost:/home/cubrid/DB/testdb_nodeB (pid 2510, state registered)

Copylogdb testdb@nodeA:/home/cubrid/DB/testdb_nodeA (pid 2505, state registered)

Server testdb (pid 2393, state registered_and_standby)
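For monitoring scripts, the node lines of this output can be parsed to find the current master. The following is a minimal sketch that assumes the line layout shown in the sample above; other CUBRID versions may print additional fields, and the names parse_nodes and find_master are hypothetical helpers, not part of CUBRID.

```python
import re

# Matches lines such as "Node nodeA (priority 1, state master)".
# The "HA-Node Info" header does not match because it starts with "HA-".
NODE_LINE = re.compile(r"^\s*Node\s+(\S+)\s+\(priority\s+(\d+),\s*state\s+(\w+)\)")

SAMPLE_STATUS = """\
@ cubrid heartbeat status
 HA-Node Info (current nodeB, state slave)
   Node nodeB (priority 2, state slave)
   Node nodeA (priority 1, state master)
 HA-Process Info (master 2143, state slave)
   Server testdb (pid 2393, state registered_and_standby)
"""


def parse_nodes(status_text):
    """Return {node_name: {"priority": int, "state": str}}."""
    nodes = {}
    for line in status_text.splitlines():
        match = NODE_LINE.match(line)
        if match:
            name, priority, state = match.groups()
            nodes[name] = {"priority": int(priority), "state": state}
    return nodes


def find_master(status_text):
    """Return the single node in state "master", or None if there is not exactly one."""
    masters = [name for name, info in parse_nodes(status_text).items()
               if info["state"] == "master"]
    return masters[0] if len(masters) == 1 else None


if __name__ == "__main__":
    print(find_master(SAMPLE_STATUS))
```

Returning None when zero or several masters are reported lets the caller treat both cases as something to investigate.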

Note

The act, deact, and deregister commands, which were used in versions earlier than CUBRID 9.0, are no longer used.

prefetchlogdb¶

The prefetchlogdb utility reads, in advance, the index and data pages to which the applylogdb process will apply replicated logs on a slave node or a replica node, and loads them into the database buffer. Running this utility therefore improves the speed at which the applylogdb process applies replicated logs.

To run the prefetchlogdb process, the ha_prefetchlogdb_enable parameter in cubrid_ha.conf must be set to yes (default: no). When it is set to yes, prefetchlogdb is started together with copylogdb and applylogdb when the cubrid heartbeat start command is executed.

To start or stop only the prefetchlogdb process, leave ha_prefetchlogdb_enable unspecified or set it to no. The usage is as follows.

cubrid heartbeat prefetchlogdb [start/stop] database host

- start: Start prefetchlogdb process

- stop: Stop prefetchlogdb process

- database: Target database name to run prefetchlogdb process

- host: Target host name to run prefetchlogdb process

Registering HA to cubrid service¶

If you register heartbeat with the CUBRID service, you can use the cubrid service utilities to start, stop, or check all the related processes at once. The processes listed in the service parameter of the [service] section in the cubrid.conf file are registered with the CUBRID service. If this parameter includes heartbeat, you can start or stop all the service processes and the HA-related processes with the cubrid service start / stop command.

How to configure cubrid.conf file is shown below.

# cubrid.conf

...

[service]

...

service=broker,heartbeat

...

[common]

...

ha_mode=on
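A quick way to confirm that a configuration matches the example above is to check the two settings programmatically. The sketch below is a deliberately simple hand-written reader, not CUBRID's own parser; read_conf and heartbeat_registered are hypothetical names, and the check only looks for the literal values shown in the example (heartbeat in service, ha_mode=on in [common]).

```python
SAMPLE_CONF = """\
# cubrid.conf
[service]
service=broker,heartbeat

[common]
ha_mode=on
"""


def read_conf(text):
    """Parse "[section]" headers and "key=value" lines; "#" starts a comment."""
    sections = {}
    current = None
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1].strip()
            sections.setdefault(current, {})
        elif "=" in line and current is not None:
            key, value = line.split("=", 1)
            sections[current][key.strip()] = value.strip()
    return sections


def heartbeat_registered(text):
    """True if heartbeat is listed in service and ha_mode is on in [common]."""
    conf = read_conf(text)
    services = [s.strip() for s in conf.get("service", {}).get("service", "").split(",")]
    return "heartbeat" in services and conf.get("common", {}).get("ha_mode") == "on"


if __name__ == "__main__":
    print(heartbeat_registered(SAMPLE_CONF))
```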

cubrid applyinfo¶

This utility is used to check the copied and applied status of replication logs by CUBRID HA.

cubrid applyinfo [option] <database-name>

- database-name: Specifies the name of the database server to monitor. Do not include a node name.

The following [options] are available for cubrid applyinfo.

- -r, --remote-host-name=HOSTNAME: Configures the name of the target node from which transaction logs are copied. Using this option outputs the active log information (Active Info.) of the target node.

- -a, --applied-info: Outputs the replication reflection information of the node executing cubrid applyinfo. The -L option is required to use this option.

- -L, --copied-log-path=PATH: Configures the location of the transaction logs copied from the other node. Using this option outputs the information of the transaction logs copied (Copied Active Info.) from the other node.

- -p, --pageid=ID: Outputs the information of a specific page in the copied logs. This is available only when the -L option is enabled. The default is 0, which means the active page.

- -v: Outputs detailed information.

- -i, --interval=SECOND: Outputs the copied status and applied status of transaction logs at intervals of the specified number of seconds. This option is mandatory if you want to see the delay status of the replicated log.

Example

The following example shows how to check log information (Active Info.) of the master node, the status information of log copy (Copied Active Info.) of the slave node, and the applylogdb info (Applied Info.) of the slave node by executing applyinfo in the slave node.

- Applied Info.: Shows the status information after the slave node applies the replication log.

- Copied Active Info.: Shows the status information after the slave node copies the replication log.

- Active Info.: Shows the status information after the master node records the transaction log.

- Delay in Copying Active Log: Shows how far the copying of transaction logs is delayed.

- Delay in Applying Copied Log: Shows how far the application of transaction logs is delayed.

[nodeB] $ cubrid applyinfo -L /home/cubrid/DB/testdb_nodeA -r nodeA -a -i 3 testdb

*** Applied Info. ***

Insert count : 289492

Update count : 71192

Delete count : 280312

Schema count : 20

Commit count : 124917

Fail count : 0

*** Copied Active Info. ***

DB name : testdb

DB creation time : 04:29:00.000 PM 11/04/2012 (1352014140)

EOF LSA : 27722 | 10088

Append LSA : 27722 | 10088

HA server state : active

*** Active Info. ***

DB name : testdb

DB creation time : 04:29:00.000 PM 11/04/2012 (1352014140)

EOF LSA : 27726 | 2512

Append LSA : 27726 | 2512

HA server state : active

*** Delay in Copying Active Log ***

Delayed log page count : 4

Estimated Delay : 0 second(s)

*** Delay in Applying Copied Log ***

Delayed log page count : 1459

Estimated Delay : 22 second(s)

The items shown by each status are as follows:

- Applied Info.

- Committed page: The pageid and offset of the last committed transaction reflected through the replication log reflection process. The difference between this value and the EOF LSA of "Copied Active Info." represents the amount of replication delay.

- Insert Count: The number of Insert queries reflected through replication log reflection process.

- Update Count: The number of Update queries reflected through replication log reflection process.

- Delete Count: The number of Delete queries reflected through replication log reflection process.

- Schema Count: The number of DDL statements reflected through replication log reflection process.

- Commit Count: The number of transactions reflected through replication log reflection process.

- Fail Count: The number of DML and DDL statements in which log reflection through replication log reflection process fails.

- Copied Active Info.

- DB name: Name of a target database in which the replication log copy process copies logs

- DB creation time: The creation time of a database copied through replication log copy process

- EOF LSA: The pageid and offset most recently copied to the target node by the replication log copy process. Log copying is delayed by the difference between this value and the EOF LSA value of "Active Info.", and by the difference between this value and the Append LSA value of "Copied Active Info."

- Append LSA: The pageid and offset most recently written to disk by the replication log copy process. This value can be less than or equal to EOF LSA. Log copying is delayed by the difference between the EOF LSA value of "Copied Active Info." and this value.

- HA server state: Status of the database server process from which the replication log copy process receives logs. For details on status, see Servers.

- Active Info.

- DB name: Name of the database on the node specified with the -r option.

- DB creation time: Creation time of the database on the node specified with the -r option.

- EOF LSA: The last pageid and offset of the database transaction log on the node specified with the -r option. Log copying is delayed by the difference between the EOF LSA value of "Copied Active Info." and this value.

- Append LSA: The pageid and offset most recently written to disk by the database on the node specified with the -r option.

- HA server state: Status of the database server on the node specified with the -r option.

- Delay in Copying Active Log

- Delayed log page count: The number of transaction log pages whose copying is delayed.

- Estimated Delay: The estimated time until log copying is completed.

- Delay in Applying Copied Log

- Delayed log page count: The number of transaction log pages whose application is delayed.

- Estimated Delay: The estimated time until log application is completed.
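As a worked illustration of how the LSA values relate to the copy delay, the sketch below extracts the EOF LSA of each section from applyinfo output and subtracts the page IDs. It assumes the output layout shown in the example above; eof_lsa_by_section and copy_page_gap are hypothetical helper names, and the values reported by cubrid applyinfo itself remain the authoritative figures.

```python
import re

SECTION = re.compile(r"^\s*\*\*\*\s*(.+?)\s*\*\*\*\s*$")
EOF_LSA = re.compile(r"^\s*EOF LSA\s*:\s*(\d+)\s*\|\s*(\d+)")

SAMPLE_APPLYINFO = """\
 *** Copied Active Info. ***
 DB name : testdb
 EOF LSA : 27722 | 10088
 Append LSA : 27722 | 10088
 HA server state : active

 *** Active Info. ***
 DB name : testdb
 EOF LSA : 27726 | 2512
 Append LSA : 27726 | 2512
 HA server state : active
"""


def eof_lsa_by_section(text):
    """Return {section title: (pageid, offset)} for every EOF LSA line."""
    result = {}
    section = None
    for line in text.splitlines():
        header = SECTION.match(line)
        if header:
            section = header.group(1)
            continue
        lsa = EOF_LSA.match(line)
        if lsa and section is not None:
            result[section] = (int(lsa.group(1)), int(lsa.group(2)))
    return result


def copy_page_gap(text):
    """Page-ID difference between the master's log and the copied log."""
    lsa = eof_lsa_by_section(text)
    return lsa["Active Info."][0] - lsa["Copied Active Info."][0]


if __name__ == "__main__":
    print(copy_page_gap(SAMPLE_APPLYINFO))
```

With the sample values, the page IDs 27726 and 27722 differ by 4, which agrees with the "Delayed log page count : 4" line under "Delay in Copying Active Log" in the example output.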

When you run this command on a replica node and "ha_replica_delay=30s" is specified in cubrid.conf, the following information is additionally printed.

*** Replica-specific Info. ***

Deliberate lag : 30 second(s)

Last applied log record time : 2013-06-20 11:20:10

Each item of the status information is as below.

- Replica-specific Info.

- Deliberate lag: The delay time that the user defined with the ha_replica_delay parameter.

- Last applied log record time: The time at which the replication log most recently applied on the replica node was originally applied on the master node.